Deep learning, a subfield of artificial intelligence (AI) and machine learning, has revolutionized numerous industries. Loosely inspired by the structure and function of the human brain, deep learning models excel at tasks like image recognition, natural language processing, and speech recognition. Python, with its extensive ecosystem of libraries and beginner-friendly syntax, has become the de facto language for deep learning development. This comprehensive guide delves into deep learning with Python, exploring core concepts, essential libraries, and practical applications.

What Is Deep Learning?

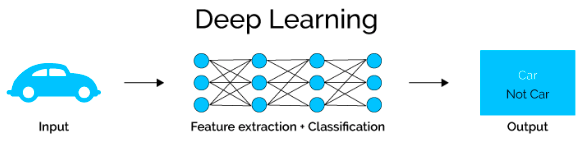

Deep learning is a machine learning technique that learns features and tasks directly from data. The data can be images, text, or sound, although deep learning concepts can be applied to other types of data as well. Deep learning is often referred to as end-to-end learning, and it has had a big impact in areas such as computer vision and natural language processing.

Consider an example in which we have a set of images and want to recognize which category of object each image belongs to: cars, trucks, or boats. We start with a labeled set of images, the training data. The labels correspond to the desired outputs of the task. The deep learning algorithm needs these labels, because they tell the algorithm about the specific features and objects in each image. The algorithm then learns how to classify input images into the desired categories. We use the term end-to-end learning because the task is learned directly from the data.

Deep Learning: Building Blocks and Architectures

Before diving into code, let’s establish a foundation in core deep learning concepts:

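Artificial Neural Networks (ANNs): Inspired by the human brain, ANNs consist of interconnected layers of artificial neurons. Each neuron combines its inputs using weights and a bias, then applies an activation function; during training, the network adjusts these weights and biases to learn from data and gradually improve its performance.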
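As a minimal sketch, assuming a sigmoid activation and made-up weights, a single artificial neuron can be written in plain Python. The names neuron and sigmoid and all the numbers are illustrative assumptions, not part of any library; in a real network, training would set the weights and bias.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1), in a numerically stable way."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """A single artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Hypothetical inputs and parameters, chosen only for illustration
output = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(output)  # 0.5, because the weighted sum is exactly 0 and sigmoid(0) = 0.5
```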

Activation Functions: These functions introduce non-linearity into the network, allowing it to learn complex patterns. Common activation functions include sigmoid, ReLU (Rectified Linear Unit), and tanh.
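The three activation functions named above can be sketched in plain Python as follows. This is an illustrative implementation, not the code any particular library uses; the sigmoid is written in a numerically stable form (an assumption of this sketch) so that large negative inputs do not overflow math.exp.

```python
import math


def sigmoid(z: float) -> float:
    """Maps any real number into (0, 1); stable for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z: float) -> float:
    """Passes positive values through unchanged and maps negative values to zero."""
    return max(0.0, z)


def tanh(z: float) -> float:
    """Maps any real number into (-1, 1), centered on zero."""
    return math.tanh(z)


# Compare the three functions on a few sample inputs
for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  relu={relu(z):.1f}  tanh={tanh(z):.3f}")
```

Because each function bends its input in a non-linear way, stacking layers that use them lets the network represent patterns that a chain of purely linear layers could not.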

Loss Functions: These functions measure the difference between the model’s predictions and the actual labels. Popular loss functions include mean squared error (MSE) for regression tasks and cross-entropy for classification tasks.
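A minimal sketch of both loss functions in plain Python, using hypothetical values. The categorical cross-entropy version assumes a one-hot label vector and clips probabilities with a small epsilon (an assumption of this sketch) so that log(0) is never evaluated.

```python
import math


def mean_squared_error(y_true: list[float], y_pred: list[float]) -> float:
    """Average of the squared differences; a common loss for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def categorical_cross_entropy(y_true: list[float], y_pred: list[float], eps: float = 1e-12) -> float:
    """Cross-entropy between a one-hot label and predicted class probabilities.

    eps clips probabilities away from 0 so that math.log(0) is never called.
    """
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


# Regression: hypothetical targets and predictions
print(mean_squared_error([3.0, 5.0], [2.0, 7.0]))  # (1^2 + 2^2) / 2 = 2.5

# Classification: the true class is index 1, and the model gives it probability 0.7
print(categorical_cross_entropy([0, 1, 0], [0.2, 0.7, 0.1]))  # equals -log(0.7), about 0.357
```

The more probability the model assigns to the correct class, the smaller the cross-entropy, which is what training tries to achieve.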

Optimization Algorithms: These algorithms adjust the weights and biases of the network to minimize the loss function and improve the model’s performance. Common optimization algorithms include gradient descent and its variants like Adam and RMSprop.
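To illustrate the idea, the sketch below fits a straight line y = w * x + b with plain (full-batch) gradient descent on the MSE loss. The data, learning rate, and number of steps are hypothetical choices for this sketch; Adam and RMSprop refine this same update rule with adaptive, per-parameter step sizes, which is not implemented here.

```python
def gradient_descent_linear(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y ~ w * x + b by plain (full-batch) gradient descent on the MSE loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction errors for the current parameters
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(error^2) with respect to w and b
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient to reduce the loss
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical noise-free data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b = gradient_descent_linear(xs, ys)
print(f"w={w:.3f}, b={b:.3f}")
```

A learning rate that is too large makes the updates overshoot and diverge, while one that is too small makes training slow; that trade-off is one motivation for adaptive optimizers.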

Deep Learning Architectures:

Deep learning leverages various architectures to tackle diverse tasks. Here are some prominent ones:

- Multi-Layer Perceptrons (MLPs): These are basic ANNs with one or more hidden layers stacked between the input and output layers. They are versatile and can be applied to various classification and regression problems.

- Convolutional Neural Networks (CNNs): These architectures excel at image recognition and computer vision tasks. CNNs utilize convolutional layers to extract features from images and pooling layers to reduce dimensionality.

- Recurrent Neural Networks (RNNs): RNNs are designed to handle sequential data like text or time series. They maintain an internal memory (a hidden state) that allows them to use information from previous steps in the sequence.

- Long Short-Term Memory (LSTM) Networks: A specific type of RNN, LSTMs are adept at handling long-term dependencies in sequential data, mitigating the vanishing gradient problem that can plague traditional RNNs.
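A minimal sketch of the recurrence behind a vanilla RNN, assuming a single hidden unit, a tanh activation, and hand-picked weights (all hypothetical): the hidden state h is fed back in at every step, which is what gives an RNN its internal memory.

```python
import math


def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Run a single-unit vanilla RNN over a sequence and return every hidden state.

    At each step the new hidden state depends on the current input AND the
    previous hidden state, which is how the network carries information forward.
    """
    h = h0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Hypothetical weights; a trained RNN would learn these values
states_a = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
states_b = rnn_forward([0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print(states_a)  # the input at step 1 still influences steps 2 and 3
print(states_b)  # all zeros: nothing to remember
```

In this toy run, the influence of the first input shrinks at every step; LSTMs add gates that help the network keep such information over long sequences.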

These are just a few fundamental concepts and architectures in deep learning. As you delve deeper, you’ll encounter more complex models and applications.

Essential Python Libraries for Deep Learning Exploration

Python boasts a rich set of libraries specifically designed for deep learning tasks. Here are some of the most popular ones:

TensorFlow: Developed by Google, TensorFlow is a powerful and versatile open-source library offering high flexibility for building and deploying deep learning models. It provides low-level control for experienced developers and higher-level APIs like Keras for a more user-friendly experience.

Keras: A high-level API for building and training deep learning models. It originally supported several backends, including Theano; today it is bundled with TensorFlow as tf.keras, and Keras 3 can also run on JAX or PyTorch. Keras offers a simpler and more intuitive syntax and abstracts away some of the complexities of low-level libraries, making it a great choice for beginners.

PyTorch: Another popular open-source library, PyTorch is known for its dynamic computational graph and ease of debugging. It provides a more Pythonic feel compared to TensorFlow and is widely used in the deep learning community, particularly in research.

Scikit-learn: While not strictly a deep learning library, scikit-learn offers valuable tools for data preprocessing, machine learning model selection, and evaluation, which are crucial steps in any deep learning project.

The choice of library depends on your specific project requirements, desired level of flexibility, and personal preferences.

Artificial Neural Networks

What Are Artificial Neural Networks?

Deep learning uses algorithms inspired by the structure and function of the brain's neural networks; as such, the models used in deep learning are called artificial neural networks.

How Do Artificial Neural Networks Work?

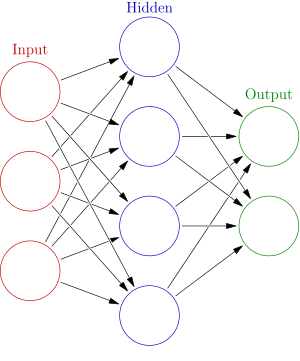

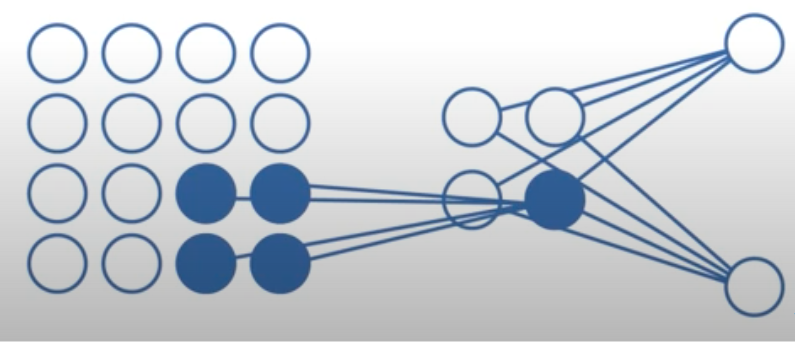

Artificial neural networks are computing systems inspired by the brain's neural networks. These networks are based on a collection of connected units called artificial neurons, or simply neurons. Each connection between neurons can transmit a signal from one neuron to another; the receiving neuron processes the signal and then signals the downstream neurons connected to it. Typically, neurons are organized in layers. Different layers may perform different kinds of transformations on their inputs, and signals essentially travel from the first layer, called the input layer, to the last layer, called the output layer. Any layers between the input and output layers are called hidden layers.

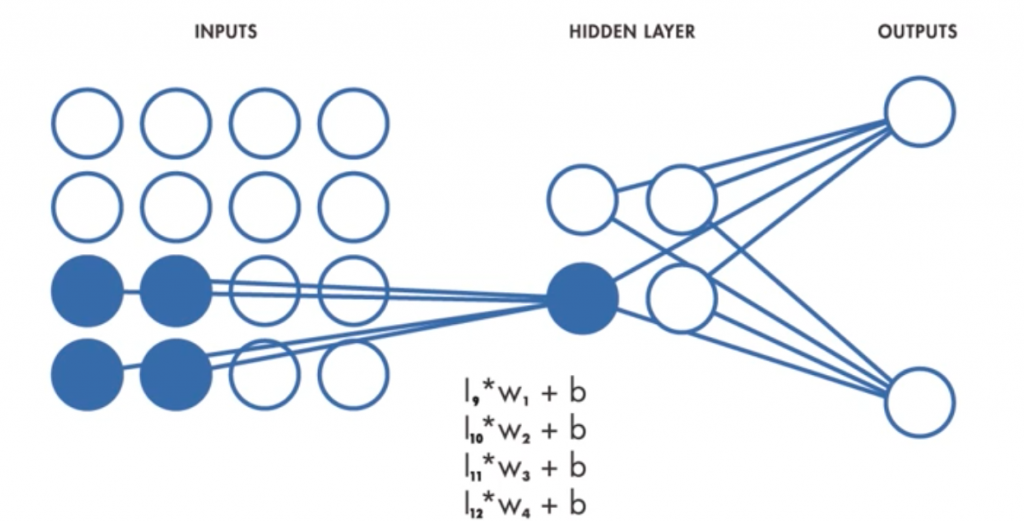

In the image above, the first layer is the input layer, so its nodes hold all of our inputs. These inputs are then transferred to a hidden layer, which has four neurons: each node illustrates a neuron, and together the nodes in this column represent a layer. Since this layer sits between the input and output layers, it is a hidden layer. From this hidden layer, the nodes transmit signals to the nodes in the output layer.
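The flow from the input layer through a hidden layer to the output layer can be sketched in plain Python. The network size (3 inputs, a hidden layer of 4 neurons as in the image, 2 outputs), the weights, and the helper names are hypothetical assumptions chosen for illustration.

```python
def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: every neuron receives every input.

    weights[j] holds the incoming weights of neuron j; biases[j] is its bias.
    """
    return [
        activation(sum(x * w for x, w in zip(inputs, neuron_weights)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


def relu(z):
    return max(0.0, z)


def identity(z):
    return z


# Hypothetical network: 3 inputs -> hidden layer of 4 neurons -> 2 outputs
hidden_weights = [[0.2, -0.1, 0.4], [0.5, 0.3, -0.2], [-0.3, 0.8, 0.1], [0.1, 0.1, 0.1]]
hidden_biases = [0.0, 0.1, -0.1, 0.0]
output_weights = [[0.3, -0.2, 0.5, 0.1], [-0.4, 0.6, 0.2, 0.3]]
output_biases = [0.0, 0.0]

x = [1.0, 2.0, 3.0]                                                    # input layer
hidden = dense_layer(x, hidden_weights, hidden_biases, relu)           # hidden layer
output = dense_layer(hidden, output_weights, output_biases, identity)  # output layer
print(len(hidden), len(output))  # 4 2
```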

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense

# X (input features, 8 columns) and y (binary 0/1 labels) are loaded in the full code

# make keras model
model = Sequential()
model.add(Dense(12, input_dim=8, activation='relu'))  # first hidden layer has 12 nodes and uses the relu activation function
model.add(Dense(8, activation='relu'))                # second hidden layer has 8 nodes and uses the relu activation function
model.add(Dense(1, activation='sigmoid'))             # output layer has one node and uses the sigmoid activation function

# compile keras model
# loss argument for cross entropy:
# binary_crossentropy is used for binary classification problems
# adam is an efficient variant of stochastic gradient descent that adapts the learning rate of each parameter
model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# fit model
# epochs is a hyperparameter: the number of passes over the entire training data
# batch_size is a hyperparameter: the number of samples processed before the model is updated
model.fit(X, y, epochs=150, batch_size=10, verbose=0)
```

Full Code: Click Here
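The epochs and batch_size comments in the Keras code above can be made concrete with a little arithmetic. This is a plain-Python sketch of the bookkeeping, not Keras's internal implementation, and the dataset size of 768 samples is a hypothetical assumption: with batch_size=10, one epoch takes 77 weight updates, the last batch holding only 8 samples.

```python
def minibatches(n_samples: int, batch_size: int):
    """Yield (start, end) index ranges covering one pass (epoch) over the data."""
    for start in range(0, n_samples, batch_size):
        yield start, min(start + batch_size, n_samples)


n_samples = 768   # hypothetical dataset size
batch_size = 10   # as in model.fit(..., batch_size=10)
epochs = 150      # as in model.fit(..., epochs=150)

updates_per_epoch = len(list(minibatches(n_samples, batch_size)))
print(updates_per_epoch)           # 77 (76 full batches + 1 batch of 8)
print(updates_per_epoch * epochs)  # 11550 weight updates in total
```

Smaller batches mean more (noisier) updates per epoch; larger batches mean fewer, smoother updates.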

Convolutional Neural Networks

What Are Convolutional Neural Networks?

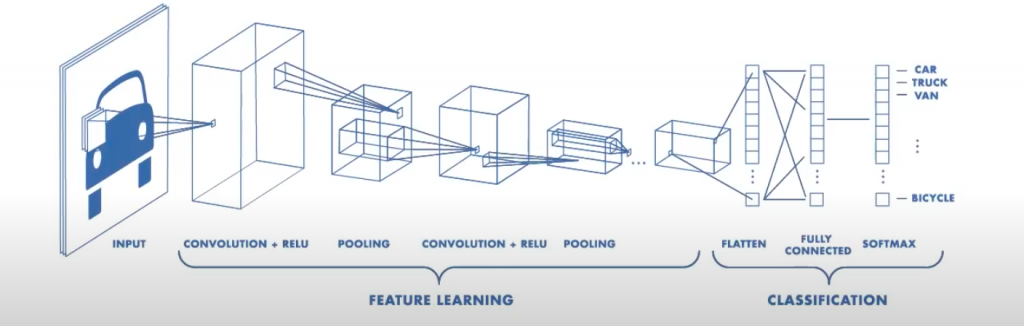

Convolutional neural networks (CNNs) are a popular type of deep neural network that is especially well suited to working with image data. The term "deep" usually refers to the number of hidden layers in the neural network: traditional neural networks contain only two or three hidden layers, while deep networks can contain many more.

How Do Convolutional Neural Networks Work?

A CNN can have tens or hundreds of hidden layers, which learn to detect different features in an image. In this feature map, we can see that every hidden layer increases the complexity of the learned image features: for example, the first hidden layer learns how to detect edges, and the last learns how to detect more complex shapes. Just as in a traditional neural network, the final layer connects every neuron from the last hidden layer to the output neurons.

CNNs work on three key concepts: first, local receptive fields; second, shared weights and biases; and third, activation and pooling.

Local Receptive Fields

In a typical neural network, each neuron in the input layer is connected to every neuron in the hidden layer. In a CNN, however, only a small region of input-layer neurons connects to each neuron in the hidden layer. These regions are referred to as local receptive fields. The local receptive field is translated across an image to create a feature map from the input layer to the hidden-layer neurons. You can use convolution to implement this process efficiently, which is why this type of network is called a convolutional neural network.
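The sliding local receptive field can be sketched as a plain-Python convolution. The 5x5 image and the 3x3 vertical-edge kernel are hypothetical; like most deep learning libraries, the sketch does not flip the kernel (strictly speaking, it computes a cross-correlation), and it uses no padding and a stride of 1.

```python
def conv2d(image, kernel):
    """Slide a kernel over an image ('valid' mode, stride 1) and return the feature map.

    Each output value depends only on the small patch of the input under the
    kernel: that patch is the corresponding neuron's local receptive field.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 5x5 image: dark on the left, bright on the right (a vertical edge)
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical 3x3 vertical-edge detector
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

for row in conv2d(image, kernel):
    print(row)  # [3.0, 3.0, 0.0] on every row
```

The feature map is large wherever the receptive field straddles the dark-to-bright edge and zero where it sees only a uniform region.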

Shared Weights and Biases

The model learns the weights and biases during training and continuously updates them with each new training example. In a CNN, the weight and bias values are the same for all hidden neurons that produce a given feature map. This means that all of those hidden neurons detect the same feature, such as an edge or a blob, in different regions of the image, which makes the network tolerant to translation of objects in an image.
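Weight sharing can be illustrated in one dimension: the same three weights are applied at every position, so a pattern produces the same peak response wherever it appears, and the response simply shifts along with it. The kernel and signals below are hypothetical values chosen for this sketch.

```python
def conv1d(signal, kernel):
    """Apply the SAME kernel (shared weights) at every position of a 1D signal."""
    k = len(kernel)
    return [sum(signal[i + t] * kernel[t] for t in range(k))
            for i in range(len(signal) - k + 1)]


# Hypothetical "blob" detector: three weights shared by every hidden neuron
kernel = [1, 2, 1]

# The same blob placed at two different positions
signal_left = [0, 1, 1, 0, 0, 0, 0, 0]
signal_right = [0, 0, 0, 0, 1, 1, 0, 0]

response_left = conv1d(signal_left, kernel)
response_right = conv1d(signal_right, kernel)
print(response_left)   # [3, 3, 1, 0, 0, 0]
print(response_right)  # [0, 0, 1, 3, 3, 1]
# Same peak height; the response moves by the same 3 positions as the blob
print(max(response_left) == max(response_right))  # True
```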

Activation and Pooling

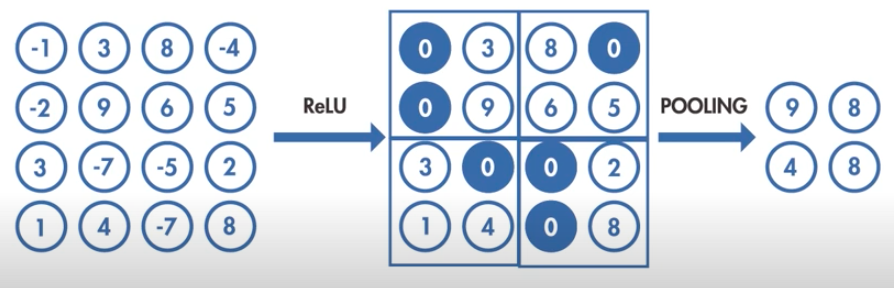

The activation step applies a transformation to the output of each neuron by using an activation function. The rectified linear unit (ReLU) is an example of a commonly used activation function: it passes a positive output through unchanged and maps a negative output to zero.

Pooling reduces the dimensionality of the feature map by condensing the output of small regions of neurons into a single output.
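A minimal sketch of 2x2 max pooling in plain Python, assuming non-overlapping windows and a feature map whose sides are multiples of the window size. Keras's padding='same' option also handles other sizes; this sketch does not, and the feature map values are hypothetical.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window.

    Assumes the feature map's height and width are multiples of `size` (no padding).
    """
    if len(feature_map) % size or len(feature_map[0]) % size:
        raise ValueError("feature map dimensions must be multiples of size")
    pooled = []
    for i in range(0, len(feature_map), size):
        row = []
        for j in range(0, len(feature_map[0]), size):
            window = [feature_map[i + di][j + dj] for di in range(size) for dj in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map (16 values) condensed to 2x2 (4 values)
feature_map = [[1, 3, 2, 0],
               [4, 2, 1, 5],
               [0, 1, 7, 2],
               [3, 2, 1, 0]]
print(max_pool2d(feature_map))  # [[4, 5], [3, 7]]
```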

Pooling simplifies the following layers and reduces the number of parameters that the model needs to learn. Now, let’s pull it all together.

CNN Code

```python
from tensorflow import keras
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, LeakyReLU, Flatten, Dense

# define variables (batch_size and epochs are used when fitting the model in the full code)
batch_size = 64
epochs = 20
num_classes = 10

fashion_model = Sequential()
# Conv2D() defines a 2D convolutional layer: 32 filters with a 3x3 kernel
fashion_model.add(Conv2D(32, kernel_size=(3, 3), activation='linear',
                         input_shape=(28, 28, 1), padding='same'))
fashion_model.add(LeakyReLU(alpha=0.1))  # activation applied as a separate layer
fashion_model.add(MaxPooling2D((2, 2), padding='same'))
fashion_model.add(Conv2D(64, (3, 3), activation='linear', padding='same'))
fashion_model.add(LeakyReLU(alpha=0.1))
fashion_model.add(MaxPooling2D(pool_size=(2, 2), padding='same'))
fashion_model.add(Conv2D(128, (3, 3), activation='linear', padding='same'))
fashion_model.add(LeakyReLU(alpha=0.1))
fashion_model.add(MaxPooling2D(pool_size=(2, 2), padding='same'))
fashion_model.add(Flatten())  # flatten the feature maps into a single vector
fashion_model.add(Dense(128, activation='linear'))
fashion_model.add(LeakyReLU(alpha=0.1))
fashion_model.add(Dense(num_classes, activation='softmax'))  # one probability per class

# Compile the model
fashion_model.compile(loss=keras.losses.categorical_crossentropy,
                      optimizer=keras.optimizers.Adam(),
                      metrics=['accuracy'])
```

Full Code: Click Here
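As a sanity check on fashion_model, the output shape and the number of trainable parameters can be worked out by hand. The sketch below does that arithmetic in plain Python; it is not a Keras call (in Keras, model.summary() reports this kind of information). It assumes Conv2D with padding='same' and stride 1 preserves height and width, and that 2x2 pooling with padding='same' rounds odd sizes up. LeakyReLU, pooling, and Flatten layers have no trainable parameters.

```python
import math


def conv_params(kernel_h, kernel_w, in_channels, filters):
    """One kernel weight per input channel per filter, plus one bias per filter."""
    return kernel_h * kernel_w * in_channels * filters + filters


def dense_params(n_inputs, n_units):
    """One weight per input per unit, plus one bias per unit."""
    return n_inputs * n_units + n_units


h = w = 28
channels = 1
total = 0
for filters in (32, 64, 128):
    # Conv2D with padding='same' and stride 1 keeps height and width unchanged
    total += conv_params(3, 3, channels, filters)
    channels = filters
    # MaxPooling2D((2, 2), padding='same') gives ceil(size / 2)
    h, w = math.ceil(h / 2), math.ceil(w / 2)
    print(f"after block with {filters} filters: {h}x{w}x{channels}")

flat = h * w * channels            # Flatten()
total += dense_params(flat, 128)   # Dense(128)
total += dense_params(128, 10)     # Dense(num_classes) with num_classes = 10
print(flat, total)                 # 2048 356234
```

Most of the parameters sit in the first Dense layer after Flatten, which is why pooling (which shrinks the flattened vector) reduces the number of parameters the model needs to learn.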

Code Examples for Building Deep Learning Models

Let’s solidify our understanding with some code examples using popular Python libraries:

1. Building a Simple MLP with Keras:

```python
from tensorflow import keras

# Define the model architecture
model = keras.Sequential([
    keras.layers.Dense(10, activation='relu', input_shape=(784,)),  # Hidden layer with 10 neurons and ReLU activation
    keras.layers.Dense(10, activation='softmax')  # Output layer with 10 neurons and softmax activation for classification
])

# Compile the model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Train the model (replace with your actual training data;
# categorical_crossentropy expects one-hot encoded labels)
model.fit(X_train, y_train, epochs=10)

# Evaluate the model (replace with your actual test data)
test_loss, test_acc = model.evaluate(X_test, y_test)

# Print the test accuracy
print(f"Test Accuracy: {test_acc:.4f}")
```

This code snippet demonstrates building a simple Multi-Layer Perceptron (MLP) for image classification using Keras. The code defines the model architecture with a hidden layer of 10 neurons and an output layer with a softmax activation function, suitable for multi-class classification problems. It then compiles the model with the Adam optimizer, the categorical cross-entropy loss function (suitable for multi-class classification; it expects one-hot encoded labels), and the accuracy metric. Finally, the code trains the model on training data (X_train, y_train) and evaluates its performance on unseen test data (X_test, y_test), printing the test accuracy.
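The MLP above expects each input as a vector of 784 values and, with categorical_crossentropy, each label as a one-hot vector. Assuming 28x28 grayscale images (28 × 28 = 784, the same size as in the CNN examples), a plain-Python sketch of both preprocessing steps follows; the helper names are hypothetical, and in practice Keras provides keras.utils.to_categorical for one-hot encoding.

```python
def flatten(image):
    """Turn a 2D image (list of rows) into a 1D list, row by row."""
    return [pixel for row in image for pixel in row]


def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot vector."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label {label} out of range for {num_classes} classes")
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


# Hypothetical 28x28 grayscale image (all zeros) with class label 3
image = [[0.0] * 28 for _ in range(28)]
x = flatten(image)
y = one_hot(3, num_classes=10)
print(len(x))  # 784, matching input_shape=(784,)
print(y)       # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```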

2. Building a CNN for Image Classification with TensorFlow:

```python
import tensorflow as tf

# Define the model architecture
model = tf.keras.Sequential([
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),  # Convolutional layer with 32 filters, 3x3 kernel, ReLU activation
    tf.keras.layers.MaxPooling2D((2, 2)),  # Max pooling layer with 2x2 window
    tf.keras.layers.Flatten(),  # Flatten the output of the convolutional and pooling layers into a vector
    tf.keras.layers.Dense(128, activation='relu'),  # Hidden layer with 128 neurons and ReLU activation
    tf.keras.layers.Dense(10, activation='softmax')  # Output layer with 10 neurons and softmax activation
])

# Compile the model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Train the model (replace with your actual training data:
# images of shape (28, 28, 1) and one-hot encoded labels)
model.fit(X_train, y_train, epochs=10)

# Evaluate the model (replace with your actual test data)
test_loss, test_acc = model.evaluate(X_test, y_test)

# Print the test accuracy
print(f"Test Accuracy: {test_acc:.4f}")
```

This code snippet showcases building a Convolutional Neural Network (CNN) for image classification with TensorFlow. The code defines a CNN architecture with a convolutional layer followed by a pooling layer to extract features from images. The flattened output is then fed into fully connected layers for classification. The model is compiled with the Adam optimizer, categorical cross-entropy loss, and the accuracy metric. As in the MLP example, the model is trained and evaluated on training and test data, respectively.
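The softmax activation in the output layer turns the last layer's raw scores (logits) into class probabilities that sum to 1. A minimal plain-Python sketch with hypothetical scores for three classes; subtracting the largest score first is a standard trick (assumed here) that avoids overflow without changing the result.

```python
import math


def softmax(logits):
    """Convert raw output scores (logits) into probabilities that sum to 1."""
    m = max(logits)                              # shift for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores from the last layer for 3 classes
probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])
print(probs.index(max(probs)))  # predicted class: 0
```

The predicted class is simply the index with the highest probability; the categorical cross-entropy loss then compares these probabilities with the one-hot label.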

These are just basic examples to illustrate the process of building deep learning models with Python libraries. As you progress, you’ll explore more complex architectures, hyperparameter tuning techniques, and advanced training methodologies.

Future: The Evolving Landscape of Deep Learning

The field of deep learning is constantly evolving, with new research and advancements emerging at a rapid pace. Here are some exciting trends to watch out for:

- The Rise of Explainable AI (XAI): As deep learning models become more complex, there’s a growing emphasis on developing XAI techniques to understand how these models arrive at their decisions. This is crucial for fostering trust and transparency in deep learning applications.

- Generative Deep Learning: This subfield focuses on models that can generate new data, like images, text, or music. Generative models have applications in creative content generation, drug discovery, and anomaly detection.

- Deep Reinforcement Learning: This branch of AI combines deep learning with reinforcement learning, enabling agents to learn through trial and error in complex environments. Deep reinforcement learning holds promise for applications in robotics, game playing, and autonomous systems.

By staying curious and continuously learning, you can position yourself at the forefront of this dynamic field and contribute to shaping the future of deep learning.

Conclusion: Unlocking the Potential of Deep Learning

Deep learning with Python unlocks a universe of possibilities. From enabling machines to recognize objects and understand language to generating creative content and automating tasks, deep learning represents a transformative technology. By embarking on this journey, you equip yourself with the skills to not only harness the power of deep learning but also to contribute to its ethical and responsible development.