Ever wondered why some machine learning models work like magic while others flop? The secret lies in model complexity. It’s the hidden force that can make or break your AI projects. But what exactly is model complexity, and why should you care? Let’s unravel this mystery together and discover how it shapes the world of machine learning.

Understanding Model Complexity

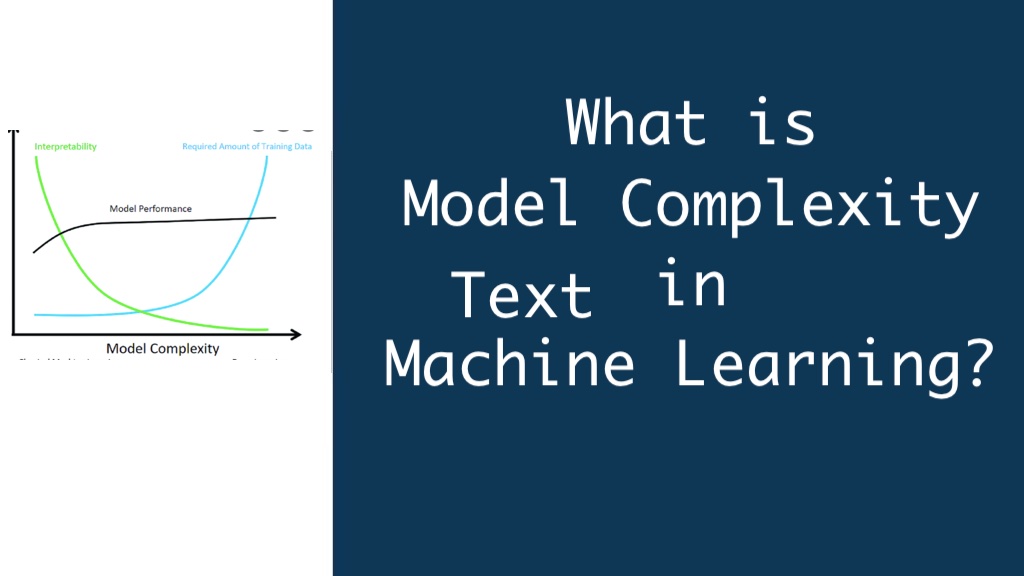

Model complexity refers to how sophisticated a machine learning algorithm is. It’s about the number of parameters and the intricacy of relationships the model can capture. Think of it as the brain power of your AI.

A simple model might only look at a few features, while a complex one could analyze thousands. But more isn’t always better. The trick is finding the right balance.

The Bias-Variance Tradeoff

At the heart of model complexity lies the bias-variance tradeoff. It’s a fundamental concept in machine learning that every data scientist should grasp.

Bias is the error from wrong assumptions in the learning algorithm. High bias can cause a model to miss relevant relations between features and outputs.

Variance is the error from sensitivity to small fluctuations in the training set. High variance can cause overfitting, where the model learns the noise in the training data too well.

The goal is to find the sweet spot between bias and variance. This balance is key to creating models that generalize well to new data.

Overfitting vs Underfitting

Overfitting happens when a model is too complex for the data. It’s like memorizing the answers instead of understanding the concept.

Signs of overfitting include:

- Perfect performance on training data

- Poor performance on new, unseen data

- The model captures noise, not just the underlying pattern

Underfitting, on the other hand, occurs when a model is too simple. It’s like trying to solve calculus with basic arithmetic.

Symptoms of underfitting are:

- Poor performance on both training and test data

- The model fails to capture important patterns

- High bias in predictions

Measuring Model Complexity

How do we quantify model complexity? There are several ways:

Number of Parameters

The most straightforward measure is counting the model’s parameters. More parameters generally mean higher complexity.

For example, in a linear regression model, each feature adds a parameter. In neural networks, it’s the number of weights and biases.

Vapnik-Chervonenkis (VC) Dimension

The VC dimension is a more theoretical measure. It represents the capacity of a model to fit different datasets.

A higher VC dimension indicates a more complex model. However, this measure is often difficult to calculate in practice.

Algorithmic Complexity

This refers to the computational resources required to run the model. Complex models often need more processing power and memory.

Regularization: Taming Complexity

Regularization is a powerful technique to control model complexity. It adds a penalty for complexity to the model’s loss function.

Common regularization methods include:

L1 Regularization (Lasso)

L1 regularization adds the absolute value of the coefficients to the loss function. This can lead to sparse models by driving some coefficients to zero.

L2 Regularization (Ridge)

L2 regularization adds the squared magnitude of coefficients. It prevents any single feature from having too much influence.

Elastic Net

Elastic Net combines L1 and L2 regularization. It offers a balance between feature selection and coefficient shrinkage.

Model Selection Techniques

Choosing the right model complexity is crucial. Here are some techniques to help:

Cross-Validation

Cross-validation helps estimate how well a model will perform on unseen data. It involves splitting the data into subsets, training on some, and validating on others.

Information Criteria

Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC) balance model fit with complexity. They penalize more complex models to prevent overfitting.

Learning Curves

Learning curves show how model performance changes with increasing data. They can help identify if a model is overfitting or underfitting.

The Role of Data in Model Complexity

The amount and quality of data significantly impact model complexity:

- More data generally allows for more complex models

- High-quality, diverse data can support more sophisticated algorithms

- Limited data requires simpler models to avoid overfitting

Feature Engineering and Model Complexity

Feature engineering can dramatically affect model complexity. Creating the right features can sometimes allow simpler models to perform well.

Techniques like Principal Component Analysis (PCA) can reduce dimensionality, simplifying the model while retaining important information.

Model Complexity in Different Algorithms

Let’s look at how complexity manifests in various machine learning algorithms:

Linear Models

In linear models, complexity is often related to the number of features. More features mean higher complexity.

Decision Trees

For decision trees, complexity is linked to the depth of the tree and the number of splits. Deeper trees with more splits are more complex.

Neural Networks

Neural network complexity depends on the number of layers, neurons per layer, and types of connections. Deep networks with many neurons are highly complex.

Support Vector Machines (SVM)

In SVMs, the choice of kernel and its parameters affects complexity. More flexible kernels like RBF can create more complex decision boundaries.

The Future of Model Complexity

As machine learning evolves, so does our understanding of model complexity:

- AutoML is making it easier to find the right model complexity automatically

- New architectures like transformers are pushing the boundaries of model size and complexity

- Research into model compression is finding ways to reduce complexity without sacrificing performance

Practical Tips for Managing Model Complexity

Here are some actionable tips for handling model complexity in your projects:

- Start simple and gradually increase complexity

- Use regularization to prevent overfitting

- Monitor training and validation performance

- Consider the interpretability-performance tradeoff

- Use domain knowledge to guide feature selection

- Experiment with different model architectures

Case Study: Model Complexity in Action

Let’s look at a real-world example. Imagine we’re predicting house prices:

We start with a simple linear regression using just square footage. The model underfits, missing important factors like location.

We add more features: bedrooms, bathrooms, location. The model improves, finding a good balance.

Then we use a deep neural network with hundreds of features. It performs perfectly on the training data but fails on new houses. Classic overfitting!

The solution? We settle on a moderately complex model with carefully chosen features and appropriate regularization.

Conclusion

Model complexity is a fundamental concept in machine learning. It’s the balancing act between creating models that are powerful enough to capture real patterns, yet simple enough to generalize well.

Remember, the goal isn’t to create the most complex model possible. It’s to find the right level of complexity for your specific problem and data.

As you continue your machine learning journey, keep model complexity in mind. It’s the key to creating AI that’s not just clever, but truly intelligent.