where algorithms are designed to learn and improve through experience, reinforcement learning (RL) emerges as a powerful paradigm. Unlike supervised learning, where the algorithm is spoon-fed labeled data, RL agents learn by interacting with an environment and receiving rewards for desirable actions. This article unveils the fundamentals of reinforcement learning and explores its implementation using Python, a popular programming language for data science and machine learning.

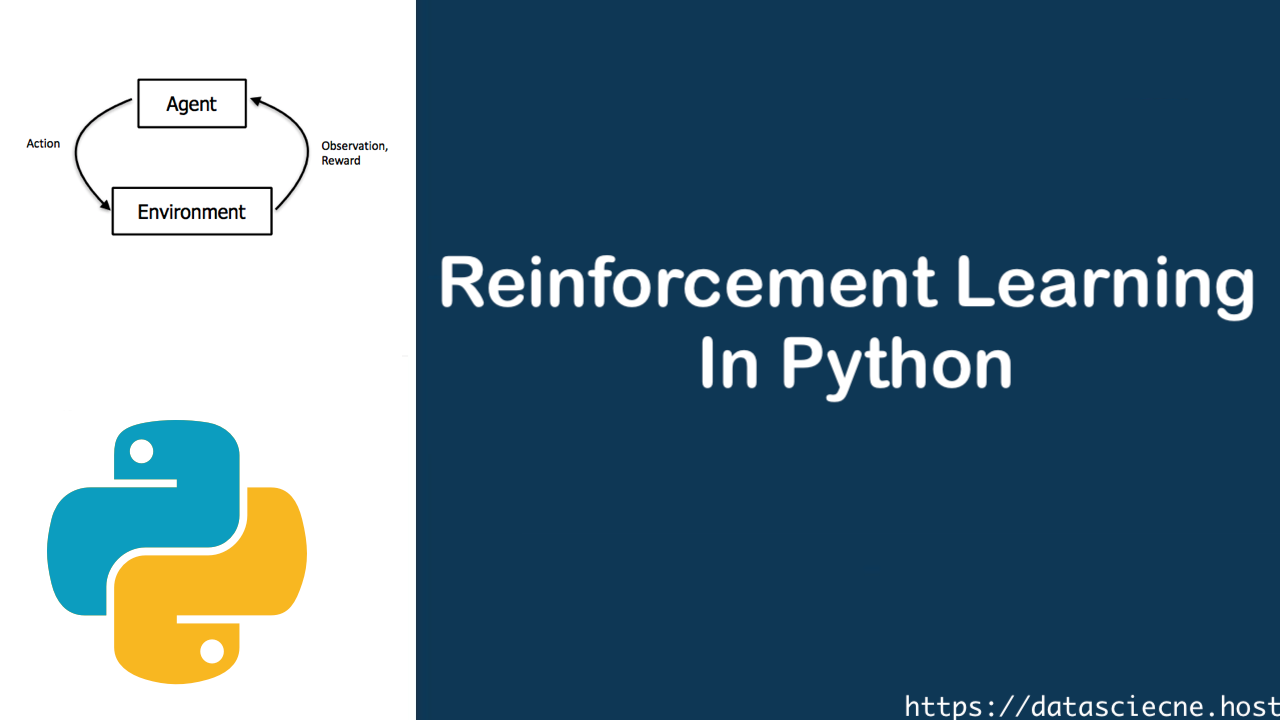

Reinforcement Learning Dance: Agent, Environment, Reward

Imagine a child learning to ride a bicycle. The child (agent) interacts with the environment (the bicycle and surroundings). Taking the correct actions (e.g., pedaling, steering) leads to positive rewards (maintaining balance, moving forward), while incorrect actions (e.g., losing balance, falling) result in negative rewards. Through trial and error, the child learns to optimize its actions to maximize the rewards – a classic example of reinforcement learning.

Key Components of the RL Ecosystem:

- Agent: The decision-maker within the environment, taking actions and learning from the consequences.

- Environment: The world the agent interacts with, providing feedback in the form of reward function.

- Action: The set of possible choices the agent can make within the environment.

- Reward: The feedback signal the agent receives for taking an action, indicating the desirability of that action.

- State: This captures the agent’s current situation within the environment, often represented as a set of relevant data points.

The Python Playground: Tools for Building RL Agents

Python offers a rich ecosystem of libraries for implementing reinforcement learning algorithms. Here are some popular choices:

- OpenAI Gym: This versatile toolkit provides a suite of environments for training and evaluating RL agents, encompassing classic control problems (e.g., balancing a cart-pole), robotic manipulation tasks, and atari games.

- Stable Baselines3: This library builds upon OpenAI Gym, offering pre-implemented and customizable RL algorithms, making it easier to experiment with various techniques.

- TensorFlow/Keras: While not exclusive to RL, these powerful deep learning libraries can be used to build neural network-based agents, particularly for complex environments or large state spaces.

- Stable Baselines3 (SB3): This library builds upon OpenAI Gym, offering a collection of pre-implemented RL algorithms, including Deep Q-Networks (DQN) and Proximal Policy Optimization (PPO). Think of SB3 as a treasure trove of pre-built tools, saving you time and effort in implementing core RL algorithms.

Getting Your Hands Dirty: A Simple RL Example

Let’s explore a basic reinforcement learning scenario using OpenAI Gym and Stable Baselines3:

- Import Libraries: Begin by importing necessary libraries like gym and stable_baselines3.

- Define the Environment: Select an environment from OpenAI Gym, For instance, you could choose the classic “CartPole-v1” environment, which simulates a pole balancing on a cart.

- Instantiate the Agent: Create an RL agent using Stable Baselines3. For the CartPole environment, a pre-built algorithm like PPO (Proximal Policy Optimization) might be suitable.

- Train the Agent: Train the agent by letting it interact with the environment, taking actions, receiving rewards, and learning to maximize its long-term reward (balancing the pole for as long as possible).

- Evaluate the Agent: Once trained, evaluate the agent’s performance by letting it run in the environment for a set number of episodes. Observe how well it can balance the pole without falling.

Getting Started with a Simple RL Example

Here’s a simplified example using OpenAI Gym to train an agent to navigate a maze environment and reach the goal:

import gym

# Create the environment

env = gym.make('CartPole-v1')

# Define the agent (replace with your chosen RL algorithm)

# ...

# Training loop

for episode in range(num_episodes):

# Reset the environment

state = env.reset()

# Interact with the environment until done

while True:

# Agent takes action based on current state

action = agent.choose_action(state)

# Take action, receive reward and new state

next_state, reward, done, info = env.step(action)

# Update the agent based on experience (reward, state transition)

# ...

# Update state for next iteration

state = next_state

# Exit loop if episode is finished

if done:

break

# Close the environment

env.close()This code snippet provides a basic structure for training an RL agent in Python. The specific implementation of the agent (choosing actions, updating based on cumulative rewards) would depend on the chosen RL algorithm.

Exploring Different RL Techniques

The realm of reinforcement learning encompasses a diverse set of algorithms, each with its strengths and weaknesses. Here are some prominent examples:

- Q-Learning: A value-based approach where the agent learns the expected future reward for taking an action in a given state.

- Deep Q-Networks (DQN): An extension of Q-Learning that leverages neural networks to handle complex state spaces.

- Policy Gradient Methods: These methods directly learn the policy (mapping from states to actions) by directly optimizing the expected reward.

The Applications of Reinforcement Learning

Reinforcement learning holds immense potential across various domains, including:

- Robotics: Training robots to perform complex tasks in dynamic environments.

- Game Playing: Developing AI agents that can learn and master complex games.

- Resource Management: Optimizing resource allocation and Markov decision processes.

By harnessing the power of Python and various RL libraries, you can embark on your own reinforcement learning journey, building intelligent agents that can interact with and learn from their environment. Remember, reinforcement learning is an active area of research, with new algorithms and applications emerging constantly. As you delve deeper, you’ll discover a vast and exciting playground for pushing the boundaries of artificial intelligence.

Experimentation and Practice:

Start with Simple Environments: Begin by experimenting with well-defined environments like CartPole or Atari games. This allows you to solidify your understanding of core RL concepts before tackling more complex scenarios.

Visualize Your Results: Utilize tools to visualize the agent’s learning process. Observing how the agent’s behavior changes over time can provide valuable insights into the effectiveness of your chosen RL algorithm.

Hyperparameter Tuning: The performance of RL algorithms is often sensitive to hyperparameter settings. Experiment with different hyperparameter values to optimize your agent’s learning.

Real-World Applications: As your expertise grows, consider exploring real-world applications of RL in Python. This could involve building an agent to optimize resource allocation in a simulation or even venturing into robotics control.

Resources and Tips for Further Exploration

As research in RL continues to evolve, Python is poised to remain a prominent platform for developing and deploying these powerful learning algorithms. With its extensive libraries, active community, and ease of use, Python equips you with a launchpad for your exploration of reinforcement learning. Here are some resources and tips to propel you further:

- OpenAI Gym Documentation: Dive deeper into the diverse environments offered by OpenAI Gym, familiarizing yourself with the different challenges your RL agents can tackle (https://www.gymlibrary.dev/).

- Stable Baselines3 Documentation: Unveil the inner workings of SB3’s pre-built algorithms and explore their customization options to fine-tune your RL models (https://github.com/DLR-RM/stable-baselines3).

- Online Courses and Tutorials: Numerous online platforms offer comprehensive courses and tutorials on RL using Python. Explore platforms like Coursera, Udacity, and Kaggle Learn to gain structured learning experiences.

- RL Experimentation Frameworks: Consider frameworks like Ray RLlib or Coach that streamline the development and experimentation process for RL algorithms, allowing you to focus on exploring different techniques and hyperparameters.

- Engage with the Community: Actively participate in online forums and communities dedicated to RL in Python. Engage in discussions, ask questions, and learn from the experiences of other practitioners (https://community.openai.com/).

- Real-World Projects: The best way to solidify your understanding is by tackling real-world projects. Start with smaller, controlled environments and gradually progress to more complex challenges. There are many open-source projects on platforms like GitHub that you can contribute to or use as inspiration for your own projects.

Advanced RL Concepts

As you delve deeper, here are some additional concepts to consider:

- Multi-agent Reinforcement Learning (MARL): This domain explores training multiple agents to cooperate or compete within an environment. Imagine training a team of agents to work together to achieve a common goal in a complex scenario.

- Hierarchical Reinforcement Learning: This approach decomposes complex tasks into subtasks, allowing the agent to learn policies for each level of the hierarchy. Think of it as breaking down a challenging task into smaller, more manageable steps for the agent to learn.

- Continuous Control: In contrast to discrete action spaces (limited set of choices), continuous control involves learning policies for actions with continuous values (e.g., robot arm movement). Imagine training an agent to control the throttle and steering of a car, requiring fine-tuned continuous actions.

A Final Note: The Power of Experimentation

The beauty of Python in RL lies in its versatility and ease of experimentation. Don’t be afraid to explore different libraries, environments, and algorithms. As you experiment, keep these key points in mind:

Start Simple: Begin with well-defined environments like CartPole before tackling more complex tasks.

Focus on the Problem: Clearly define the RL problem you’re trying to solve and choose the appropriate environment and algorithm.

Hyperparameter Tuning: Fine-tune the hyperparameters of your RL model to optimize its performance.

Visualize the Results: Use visualization tools to gain insights into the agent’s learning process and identify areas for improvement.

By harnessing the power of Python libraries, embracing experimentation, and continuously learning, you can unlock the vast potential of reinforcement learning and tackle challenging problems in various domains. So, dive into the world of RL with Python and embark on a rewarding journey of discovery!