Machine learning algorithms are revolutionizing numerous aspects of our lives, from medical diagnosis to fraud detection and spam filtering. However, despite their impressive capabilities, these algorithms are not infallible. One of the key challenges in evaluating their performance lies in understanding two critical concepts: false positives and false negatives. These terms, though seemingly straightforward, can have significant consequences depending on the application. This article delves into the world of false positives and false negatives, exploring their definitions, implications, and strategies for mitigating their impact on machine learning models.

Confusion Matrix: A Framework for Understanding Classification Errors

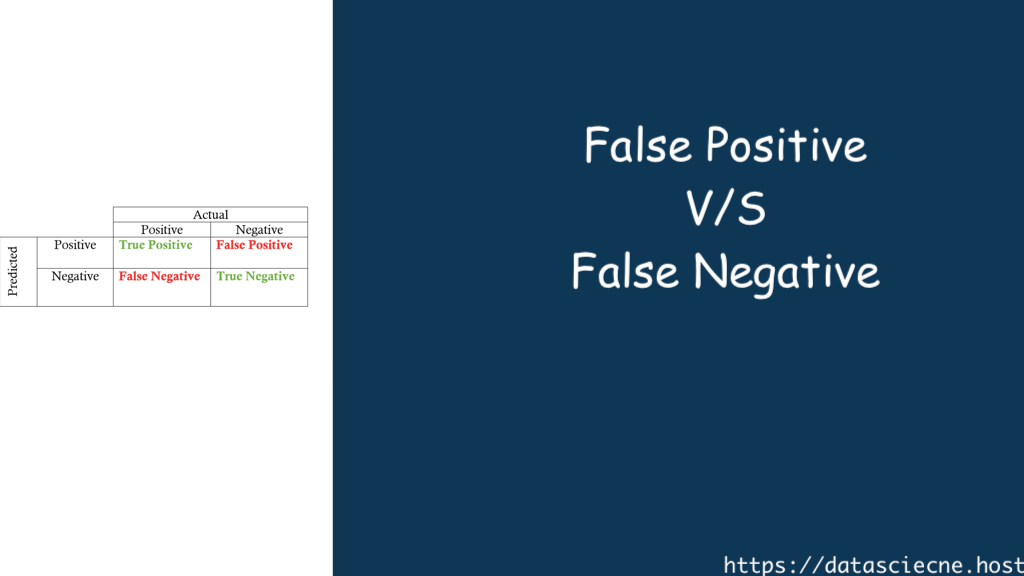

To understand false positives and negatives, we need to introduce the concept of a confusion matrix. This is a table that summarizes the performance of a classification model. It has two main dimensions:

- Predicted Class: This represents the class the model predicted for a given data point.

- Actual Class: This represents the true class of the data point.

The confusion matrix categorizes the results into four key areas:

- True Positives (TP): These represent instances where the model correctly predicted the positive class. For example, a spam filter correctly identifying a spam email as spam.

- True Negatives (TN): These represent instances where the model correctly predicted the negative class. In the spam filter example, correctly identifying a legitimate email as not spam falls under this category.

- False Positives (FP): These are the errors of commission. They occur when the model incorrectly predicts the positive class. Imagine the spam filter mistakenly classifying a legitimate email as spam. This can lead to important messages being missed.

- False Negatives (FN): These are the errors of omission. They occur when the model incorrectly predicts the negative class. A medical diagnosis system failing to detect a disease when it is present is a classic example of a false negative.

Understanding these four categories is crucial for evaluating the performance of a machine learning model.

False Positives: The Cost of Overzealous Predictions

False positives occur when a model incorrectly classifies a negative instance as positive. The consequences of these errors can vary significantly depending on the application. Here are some real-world examples:

- Spam Filtering: A high rate of false positives can lead to users missing important emails, impacting productivity and trust in the system.

- Facial Recognition: False positives can lead to misidentification and wrongful accusations, raising privacy concerns.

- Fraud Detection: An overzealous fraud detection system might flag legitimate transactions, causing inconvenience and frustration for customers.

The cost of false positives can be significant, leading to wasted resources, lost trust, and even potential harm.

False Negatives: The Peril of Missed Opportunities

False negatives occur when a model incorrectly classifies a positive instance as negative. These errors can be equally detrimental, particularly in scenarios where early detection is crucial. Here are some examples:

- Medical Diagnosis: A missed cancer diagnosis due to a false negative can have devastating consequences.

- Loan Approval: A loan application from a qualified borrower might be rejected due to a false negative in a credit risk assessment system.

- Security Intrusion Detection: A system that fails to detect a cyberattack due to false negatives can leave a network vulnerable.

The consequences of false negatives can range from financial losses to missed opportunities and even endangerment of lives.

Why Do False Positives and False Negatives Happen?

Several factors can contribute to these classification errors:

- Data Imbalance: When a dataset is heavily skewed towards one class, the model might struggle to learn the nuances of the minority class, leading to increased false negatives for that class. For instance, a fraud detection system trained primarily on legitimate transactions might miss fraudulent activities with less common patterns.

- Noisy Data: Data riddled with errors, inconsistencies, or outliers can confuse the model, leading to misclassifications. Imagine a spam filter trained on a dataset containing poorly formatted emails, increasing the chances of both false positives and false negatives.

- Model Complexity: Overly complex models can become prone to overfitting, where they learn the training data too well but fail to generalize to unseen data. This can result in both false positives and false negatives on new data points.

- Threshold Selection: In some classification tasks, a threshold is used to decide whether an instance belongs to a positive or negative class. Setting an inappropriate threshold can significantly impact the rate of false positives and negatives. For example, a credit card fraud system with a very low threshold might flag legitimate transactions as fraudulent (false positives), while a high threshold might miss actual fraud (false negatives).

Strategies to Reduce False Positives and False Negatives

Fortunately, several strategies can be employed to minimize the occurrence of these classification errors:

- Data Preprocessing: Cleaning data, handling missing values, and addressing outliers can significantly improve model performance and reduce errors.

- Data Augmentation: For imbalanced datasets, techniques like oversampling (replicating instances from the minority class) or undersampling (reducing instances from the majority class) can help balance the data and improve model performance on the minority class.

- Model Selection and Tuning: Choosing an appropriate model complexity and carefully tuning hyperparameters can help prevent overfitting and improve generalization. Techniques like cross-validation can help evaluate model performance on unseen data.

- Cost-Sensitive Learning: In some cases, assigning different costs to false positives and false negatives can guide the model towards minimizing the more critical error type. For instance, a fraud detection system might prioritize minimizing false negatives (missed fraud) even if it leads to some false positives (flagging legitimate transactions).

Mitigating the Impact: Strategies for Balancing False Positives and Negatives

The ideal scenario is to minimize both false positives and negatives. However, in many cases, there exists a trade-off between these two types of errors. Here are some strategies to achieve a balance:

- Adjusting the Threshold: Many classification models rely on a threshold to classify data points. Adjusting this threshold can influence the rate of false positives and negatives. Lowering the threshold might decrease false positives but increase false negatives, and vice versa. Choosing the right threshold depends on the specific application and the relative cost of each type of error.

- Cost-Sensitive Learning: Some machine learning algorithms can be trained with cost functions that take into account the relative cost of false positives and negatives. This allows the model to prioritize reducing the more critical error type.

- Ensemble Learning: Combining multiple models with different strengths and weaknesses can lead to a more robust system with a better balance between false positives and negatives.

Mitigating the Mistakes: Strategies for a More Accurate ML

Here are some strategies to reduce false positives and negatives:

- Data Quality: High-quality, well-labeled training data is the foundation for a robust ML model. Errors in the data can lead to misclassification.

- Choosing the Right Algorithm: Different algorithms have varying strengths and weaknesses. Selecting an algorithm suitable for the specific task can improve accuracy.

- Tuning Hyperparameters: Hyperparameters are settings within the model that influence its behavior. Optimizing these parameters can minimize errors.

- Cost-Sensitive Learning: In some situations, assigning different costs to false positives and negatives can guide the model towards the desired outcome.

- Ensemble Learning: Combining multiple models (ensemble learning) can often improve overall accuracy and reduce the risk of both false positives and negatives.

Evaluating the Trade-Off: Finding the Right Balance

It’s important to recognize that reducing one type of error often comes at the expense of increasing the other. For example, tightening a spam filter to catch more spam might also result in more legitimate emails being flagged. Finding the optimal balance between false positives and false negatives depends on the specific application and the relative costs associated with each error type.

Several evaluation metrics can help assess the performance of a classification model and guide the decision-making process. These include:

- Accuracy: The overall percentage of correctly classified instances.

- Precision: The proportion of positive predictions that are actually true positives.

- Recall: The proportion of actual positive instances that are correctly identified as positive.

- F1-Score: A harmonic mean of precision and recall, offering a balanced view of model performance.

By analyzing these metrics, data scientists can understand the trade-off between false positives and false negatives and make informed decisions about model configuration and deployment.

The Nuances of Classification Errors

The discussion so far has focused on binary classification tasks with two classes (positive and negative). However, the real world often presents scenarios with multiple classes. For instance, an image recognition system might categorize images as containing cats, dogs, or neither. In such cases, the concept of false positives and false negatives gets more nuanced.

- Multi-Class Classification Errors: When dealing with multiple classes, a false positive for one class can be a true positive for another. For example, an image recognition system might misclassify a picture of a lion as a tiger. This represents a false positive for tigers but a true positive for identifying a large feline (assuming there’s a “large feline” class).

- Confusion Matrix: A confusion matrix is a valuable tool for visualizing classification errors in multi-class scenarios. It shows how many instances were classified into each class compared to their actual class labels. This helps identify which classes are most prone to confusion and which types of errors (false positives for specific classes) are occurring most frequently.

- False Discovery Rate (FDR): This metric is particularly relevant in situations where positive predictions are scarce, and false positives can be misleading. It measures the proportion of positive predictions that are actually false positives.

- Type I and Type II Errors: These terms are statistically equivalent to false negatives and false positives, respectively. They are often used in hypothesis testing, where the goal is to avoid incorrectly rejecting a true hypothesis (Type I error) or failing to reject a false hypothesis (Type II error).

The Human Factor: The Role of Domain Expertise

Machine learning models are powerful tools, but they are not replacements for human expertise. Understanding the limitations of these models is crucial, particularly when dealing with high-stakes decisions.

- Explainability and Interpretability: In some domains, it’s essential to understand why a model makes a particular prediction. Techniques like LIME (Local Interpretable Model-Agnostic Explanations) can help explain model predictions, allowing human experts to assess the validity of the classification and potentially intervene if there’s a high risk of a false positive or negative.

- Human-in-the-Loop Systems: Combining machine learning predictions with human judgment can be a powerful approach. For instance, a fraud detection system might flag suspicious transactions, but a human analyst reviews the flagged cases to make the final decision, potentially mitigating false positives.

Mitigating Risk and Ensuring Responsible AI

Machine learning models are powerful tools, but they should not operate in a vacuum. Human oversight and intervention are crucial for mitigating the risks associated with classification errors. Here’s how:

- Domain Expertise: Involving domain experts in model development and evaluation ensures that the model is aligned with real-world needs and sensitivities.

- Explainable AI (XAI): Techniques like LIME (Local Interpretable Model-agnostic Explanations) can help understand why a model makes certain predictions, allowing humans to identify potential biases or errors.

- Transparency and Explainability: Communicating the limitations and potential errors associated with a model is essential for building trust and ensuring responsible AI deployment.

Conclusion: A Call for Responsible Machine Learning

Machine learning algorithms are revolutionizing numerous fields, but it’s vital to recognize that they are not perfect. False positives and false negatives are inherent to the classification process. By understanding these errors, their root causes, and strategies for mitigation, we can make informed decisions about deploying machine learning models responsibly. Furthermore, acknowledging the limitations of these models and integrating human expertise where necessary ensures that these powerful tools are used ethically and effectively, ultimately leading to better outcomes across various domains.