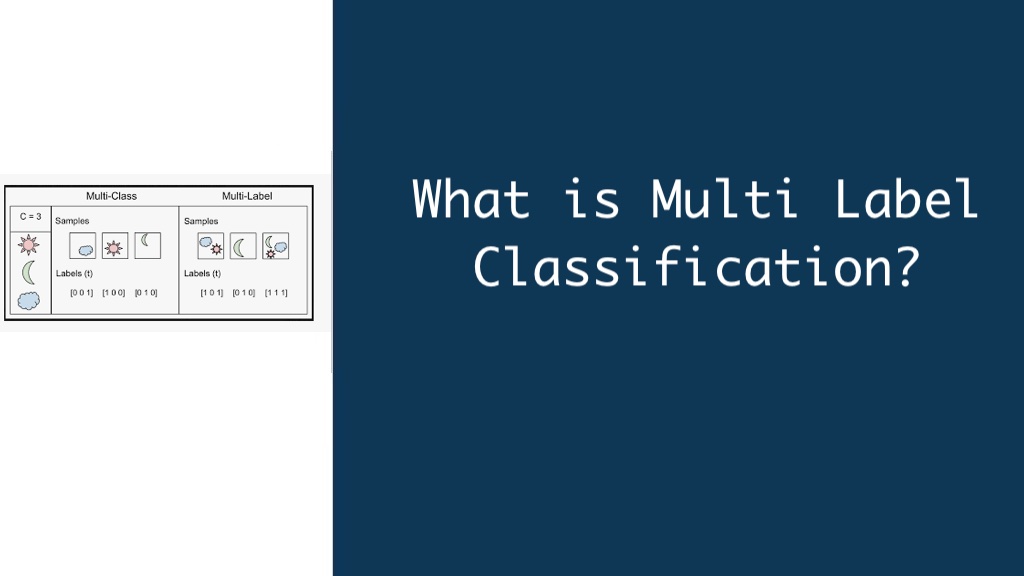

classification tasks often involve assigning a single label to an input. But what if a single label doesn’t do justice to the complexity of the data? This is where multi-label classification emerges as a powerful tool. It allows us to assign multiple relevant labels to a single data point, providing a richer and more nuanced understanding.

Imagine trying to categorize a news article. While a single-label approach might classify it as “sports,” multi-label classification could recognize it as “sports” and “business” if it discusses a sponsorship deal. This ability to assign multiple labels unlocks a wider range of applications.

How Does Multi-Label Classification Work?

Traditional classification algorithms are designed to output a single label. Multi-label classification tackles this challenge in a few ways:

- Adapting Existing Algorithms: Some techniques modify existing single-label classification algorithms to handle multiple labels. This might involve training a separate classifier for each possible label or using a more complex output layer in a neural network.

- Problem Transformation: Another approach involves transforming the multi-label problem into a series of binary classification tasks. For example, we could create a separate binary classifier for each label, essentially asking “Does this data point belong to category X?”

Building the Model: Training and Evaluation

Training a multi-label classification model follows similar principles to traditional classification, but with some key considerations:

- Data Preparation: The training data needs to be structured to reflect the multi-label nature of the problem. Each data point must be associated with a set of relevant labels.

- Evaluation Metrics: Metrics used for single-label classification, like accuracy, might not be sufficient for multi-label problems. Metrics like Hamming loss or precision-recall curves are better suited for evaluating the model’s performance.

Applications and Advantages of Multi-Label Classification

Multi-label classification finds applications in various domains:

- Text Classification: News articles can be categorized into multiple topics (politics, sports, entertainment), and product descriptions can be assigned labels for features and benefits.

- Image Recognition: Images can be tagged with multiple objects present (car, person, building) or even their attributes (sunny, outdoor, crowded).

- Bioinformatics: Protein functions can be predicted, where a single protein might be involved in multiple biological processes.

Here are some compelling advantages of multi-label classification:

- Richer Data Representation: By capturing the multifaceted nature of data, it provides a more nuanced understanding compared to single-label classification.

- Increased Explanatory Power: Multi-label models can reveal complex relationships between data points and multiple categories, leading to deeper insights.

- Flexibility: It readily adapts to situations where instances can belong to multiple classes, making it suitable for real-world data with inherent complexity.

Future of Multi-Label Classification

As research in machine learning continues to evolve, we can expect advancements in multi-label classification in several areas:

- Addressing Data Sparsity: Techniques like active learning and data augmentation can be leveraged to mitigate the challenges associated with limited training data.

- Developing Robust Evaluation Metrics: New metrics that capture the nuances of multi-label classification are being actively explored to provide a more comprehensive picture of model performance.

- Enhancing Model Interpretability: Research into explainable AI techniques can be applied to multi-label models, allowing for a clearer understanding of how these models arrive at their predictions.

Challenges and Considerations: The Road Ahead for Multi-Label Classification

While powerful, multi-label classification also presents certain challenges:

- Increased Model Complexity: Training models to handle multiple labels simultaneously can be computationally expensive and require careful design to avoid overfitting.

- Evaluation Metrics: Traditional classification metrics like accuracy might not be well-suited for multi-label tasks. New metrics like Hamming loss or precision-recall curves are often employed.

- Data Imbalance: Real-world data often exhibits imbalances between labels. Techniques to address class imbalance become crucial for effective multi-label learning.

Despite these challenges, advancements in deep learning architectures and robust evaluation metrics are paving the way for the continued development and adoption of multi-label classification. As this field progresses, we can expect even more innovative applications that leverage the power of assigning multiple, relevant labels to complex data.