In linear algebra, eigenvalues and eigenvectors are fundamental concepts with applications in various fields, from data compression to physics. An eigenvector of a matrix is a special direction that is only scaled, by a factor called the eigenvalue, when transformed by the matrix. All eigenvectors that share an eigenvalue, together with the zero vector, form that eigenvalue’s eigenspace. However, unearthing these eigenvectors can sometimes feel like an enigmatic task. This article delves into the methods for extracting eigenvectors from a matrix’s eigenspace, equipping you with the tools to navigate this mathematical landscape.

Eigenspace: A Sanctuary of Special Directions

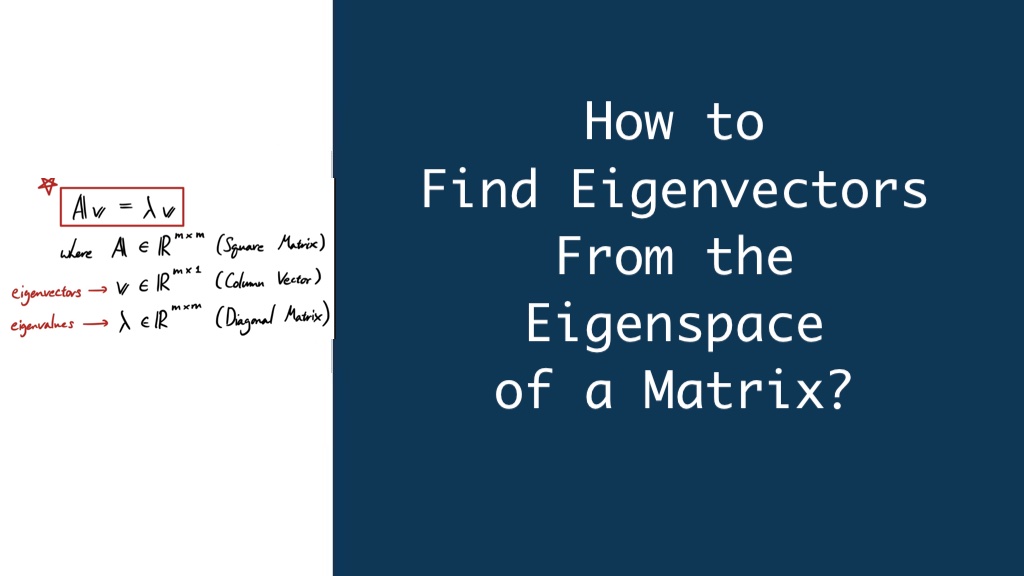

The eigenspace of a square matrix A for an eigenvalue λ, often written E_λ(A), is the subspace made up of the zero vector together with every non-zero vector (v) that satisfies the following equation:

Av = λv

Here, λ represents the eigenvalue associated with the eigenvector v. In simpler terms, when you multiply a matrix A by an eigenvector v, the resulting product is simply the eigenvector v scaled by the eigenvalue λ. Each eigenvalue has its own eigenspace, which collects all of the directions that A scales by that particular factor. The zero vector belongs to the eigenspace so that it forms a subspace, but it is not itself counted as an eigenvector.
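As a quick numerical illustration of the defining equation, the sketch below checks whether a candidate vector and scalar satisfy Av = λv. The matrix, the candidate vectors, and the helper name is_eigenpair are assumptions made up for this example; the helper is not part of NumPy.

```python
import numpy as np

def is_eigenpair(A, v, lam, tol=1e-9):
    """Return True if v is a non-zero vector with A @ v equal to lam * v (up to tol)."""
    v = np.asarray(v, dtype=float)
    if np.allclose(v, 0.0):
        return False  # the zero vector is never an eigenvector
    return bool(np.allclose(A @ v, lam * v, atol=tol))

# Illustrative matrix (an assumption for this sketch)
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

print(is_eigenpair(A, [1, 1], 3))   # A @ [1, 1] = [3, 3] = 3 * [1, 1]
print(is_eigenpair(A, [1, 0], 2))   # A @ [1, 0] = [2, 1], not a multiple of [1, 0]
```

The comparison uses a tolerance rather than exact equality because floating-point arithmetic rarely reproduces λv bit for bit.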

However, this definition doesn’t hand us the individual eigenvectors. To extract them, we need to delve a little deeper.

Eigenvectors: A Two-Step Approach

There are two primary methods for extracting eigenvectors from the eigenspace of a matrix:

1. Utilizing the Eigenvalue Equation:

This approach leverages the defining equation of the eigenspace, Av = λv. Here’s how it works:

- Step 1: Solve for the Eigenvalues: The first step is to determine the eigenvalues of the matrix A. You can achieve this by solving the characteristic equation, det(A – λI) = 0, where I represents the identity matrix. This equation will yield the eigenvalues (λ) associated with the matrix.

- Step 2: Solve for the Eigenvectors for Each Eigenvalue: Once you have the eigenvalues, you can solve the equation Av = λv for each eigenvalue (λ) separately. This typically involves transforming the equation into a system of linear equations and solving it for the unknown vector (v), which represents the eigenvector corresponding to the specific eigenvalue.
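The two steps above can be sketched in NumPy. This is a minimal illustration, assuming a small example matrix chosen for this sketch; np.poly returns the coefficients of the characteristic polynomial of a square matrix, and np.roots finds its roots. The candidate eigenvectors are worked out by hand and only checked by the code.

```python
import numpy as np

# Illustrative matrix (an assumption for this sketch)
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# Step 1: coefficients of det(A - λI) as a polynomial in λ, then its roots.
char_poly = np.poly(A)              # λ² - 4λ + 3 for this matrix
eigenvalues = np.roots(char_poly)
print(np.sort(eigenvalues.real))

# Step 2: for each eigenvalue, check a hand-derived eigenvector against A v = λ v.
candidates = {3.0: np.array([1.0, 1.0]),
              1.0: np.array([1.0, -1.0])}
for lam, v in candidates.items():
    print(lam, v, np.allclose(A @ v, lam * v))
```

Root-finding on the characteristic polynomial mirrors the hand method, but it tends to be numerically fragile for larger matrices, which is one reason library routines such as numpy.linalg.eig take a different route.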

2. Null Space Method (For Degenerate Eigenspaces):

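In some cases, a matrix might have a degenerate eigenvalue, meaning a single eigenvalue has more than one linearly independent eigenvector. (Every eigenvalue has infinitely many eigenvectors, since any non-zero multiple of an eigenvector is again an eigenvector; degeneracy means the eigenspace has a dimension greater than one.) In such scenarios, solving Av = λv by hand and stopping at the first solution can miss some of these directions. The null space method is the same calculation rewritten as (A – λI)v = 0, but it makes the goal explicit: find a basis for the whole solution space. Here’s how it works: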

- Step 1: Calculate the Eigenvalues: Similar to approach 1, you first need to determine the eigenvalues of the matrix A.

- Step 2: Construct the Matrix (A – λI): For each eigenvalue (λ), create a new matrix by subtracting the eigenvalue times the identity matrix (λI) from the original matrix A.

- Step 3: Find the Null Space: Calculate the null space of the newly formed matrix (A – λI). The null space of a matrix encompasses all vectors that, when multiplied by the matrix, result in the zero vector.

- Step 4: Extract the Eigenvectors: Every non-zero vector within the null space of (A – λI) is an eigenvector corresponding to the eigenvalue (λ). A basis of that null space gives a complete set of linearly independent eigenvectors for it.

Important Note: The null space method might yield more than one linearly independent eigenvector for a specific eigenvalue, reflecting the degeneracy of the eigenspace.
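The null space steps can be sketched with NumPy alone by using the singular value decomposition. The helper name null_space_basis, the tolerance, and the diagonal example matrix with a repeated eigenvalue are all assumptions made for this illustration.

```python
import numpy as np

def null_space_basis(M, tol=1e-10):
    """Orthonormal basis (as columns) for the null space of M, via the SVD."""
    _, s, vh = np.linalg.svd(M)
    rank = int(np.sum(s > tol))
    # Rows of vh beyond the rank span the null space; transpose to get columns.
    return vh[rank:].T

# Illustrative matrix with a repeated eigenvalue λ = 2 (an assumption for this sketch)
A = np.array([[2.0, 0.0, 0.0],
              [0.0, 2.0, 0.0],
              [0.0, 0.0, 3.0]])

lam = 2.0
basis = null_space_basis(A - lam * np.eye(3))
print(basis.shape[1])                        # dimension of the eigenspace for λ = 2
print(np.allclose(A @ basis, lam * basis))   # every basis column satisfies A v = λ v
```

If SciPy is available, scipy.linalg.null_space performs a similar SVD-based computation and could replace the hand-written helper.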

Embarking on the Quest: Eigenvectors

Here’s a step-by-step approach to unearth eigenvectors from the eigenspace:

- Unearth the Eigenvalues: The first step is to find the eigenvalues of your matrix A. This can be achieved by solving the characteristic equation, det(A – λI) = 0, where I represents the identity matrix and det denotes the determinant. The solutions to this equation will be your eigenvalues.

- Construct the Eigenvector Equation: Once you have an eigenvalue λ, you can form the eigenvector equation: (A – λI)v = 0. Here, v represents the eigenvector you seek. This is simply Av = λv rearranged: moving λv to the left-hand side gives Av – λIv = 0, and factoring out v gives (A – λI)v = 0.

- Solve the System of Equations: The eigenvector equation (A – λI)v = 0 represents a homogeneous system of linear equations. Solve this system for the unknown vector v, typically by Gaussian elimination (row reduction). Matrix inversion cannot be used here, because (A – λI) is singular whenever λ is an eigenvalue.

- Non-Trivial Solutions: An important caveat exists. Because λ is an eigenvalue, the determinant of (A – λI) is zero, so the system (A – λI)v = 0 has infinitely many solutions: every entry of a solution vector can be multiplied by the same constant and the result is still a solution. The zero vector always solves the system, but it is excluded by definition. We’re interested in non-trivial solutions, and in practice one representative per direction is chosen, often scaled to unit length.

- Multiple Eigenvectors (Optional): A single eigenvalue can have several linearly independent eigenvectors, meaning none of them can be expressed as a linear combination of the others. In that case the eigenspace for that eigenvalue has a dimension greater than one, and describing it fully takes one basis vector per dimension.
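The caveat about non-trivial solutions can be seen numerically. In the sketch below (the matrix and the eigenvalue λ = 3 are assumptions chosen for illustration), A – λI has determinant zero, so it has no inverse, and every non-zero multiple of one solution is again a solution.

```python
import numpy as np

# Illustrative matrix and one of its eigenvalues (assumptions for this sketch)
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])
lam = 3.0
M = A - lam * np.eye(2)            # [[-1, 1], [1, -1]]

# det(A - λI) is zero at an eigenvalue, so M cannot be inverted.
print(np.isclose(np.linalg.det(M), 0.0))

# Solving by hand: -x + y = 0, so y = x. Pick x = 1 as a representative.
v = np.array([1.0, 1.0])

# Any non-zero multiple of v solves the same system.
for c in (1.0, -2.5, 10.0):
    print(c, np.allclose(M @ (c * v), 0.0))

# A common convention is to normalize the representative to unit length.
v_unit = v / np.linalg.norm(v)
print(v_unit)
```

Because the scale is arbitrary, different tools may report different but proportional eigenvectors for the same eigenvalue.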

Utilizing Software Tools (Optional):

For larger matrices or complex calculations, leveraging software libraries like NumPy or SciPy in Python can significantly streamline the process of finding eigenvalues and eigenvectors. These libraries offer built-in functions like numpy.linalg.eig that efficiently compute both eigenvalues and eigenvectors.

Python in Action: A Practical Example

Let’s solidify our understanding with a practical example using Python’s NumPy library:

```python
import numpy as np

# Define the matrix
A = np.array([[2, 1], [1, 2]])

# Find the eigenvalues and eigenvectors
eigenvalues, eigenvectors = np.linalg.eig(A)

# Print the eigenvalues
print("Eigenvalues:", eigenvalues)

# Extract the eigenvectors (one for each eigenvalue, stored as columns)
for i in range(len(eigenvalues)):
    eigenvector = eigenvectors[:, i]
    print("Eigenvector for eigenvalue", eigenvalues[i], ":", eigenvector)
```

This code snippet will calculate the eigenvalues and eigenvectors of the matrix A and print them for your reference.
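The matrix in this example is symmetric, so numpy.linalg.eigh is a possible alternative to numpy.linalg.eig. The sketch below is one way to use it and to confirm the result by checking the residual of Av = λv; the tolerance of 1e-12 is an arbitrary choice for this illustration.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# eigh assumes a symmetric (or Hermitian) matrix and returns eigenvalues
# in ascending order, with orthonormal eigenvectors stored as columns.
eigenvalues, eigenvectors = np.linalg.eigh(A)

for lam, v in zip(eigenvalues, eigenvectors.T):
    # Residual of A v = λ v; it should be zero up to rounding error.
    residual = np.linalg.norm(A @ v - lam * v)
    print(lam, v, residual < 1e-12)
```

The signs of the returned eigenvectors are not fixed, so a result of [-0.707, -0.707] is as valid as [0.707, 0.707].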

Illuminating the Process: A Worked Example

Let’s illustrate the concept with a concrete example:

Consider the matrix A = [[1, 2], [2, 1]]

- Finding Eigenvalues: We need to solve the characteristic equation:

det(A – λI) = 0

For this matrix, det(A – λI) = (1 – λ)(1 – λ) – 4 = λ² – 2λ – 3, which factors as (λ – 3)(λ + 1) = 0. Therefore, the eigenvalues are λ1 = 3 and λ2 = -1.

- Extracting Eigenvectors:

- For λ1 = 3: Substitute λ1 into A * v = λv. We get:

[[1, 2], [2, 1]] * v = 3v

Writing v = [x, y], both rows reduce to x = y, so one solution is v1 = [1, 1]. This is an eigenvector corresponding to λ1.

- For λ2 = -1: Substitute λ2 into A * v = λv. We get:

[[1, 2], [2, 1]] * v = -v

Both rows reduce to x + y = 0, so one solution is v2 = [1, -1]. This is an eigenvector corresponding to λ2 (remember, any non-zero multiple of it is also an eigenvector for the same eigenvalue).

In this example, the eigenspace for λ1 = 3 contains all vectors proportional to v1 = [1, 1], and the eigenspace for λ2 = -1 contains all vectors proportional to v2 = [1, -1].
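A hand calculation for this matrix can be cross-checked numerically. The sketch below asks NumPy for the eigenvalues of A = [[1, 2], [2, 1]] and then tests two candidate eigenpairs directly against Av = λv. The candidate pairs come from expanding det(A – λI) = (1 – λ)² – 4 = (λ – 3)(λ + 1) by hand; they are inputs to the check, not output of a library call.

```python
import numpy as np

A = np.array([[1.0, 2.0],
              [2.0, 1.0]])

# Eigenvalues as computed by NumPy, sorted for easy comparison.
print(np.sort(np.linalg.eigvals(A)))

# Check candidate eigenpairs directly against A v = λ v.
pairs = [(3.0, np.array([1.0, 1.0])),
         (-1.0, np.array([1.0, -1.0]))]
for lam, v in pairs:
    print(lam, v, np.allclose(A @ v, lam * v))
```

Checking A @ v against lam * v is a cheap habit that catches sign slips in the characteristic polynomial.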

The Power of Eigenvectors: Applications

Eigenvectors and eigenspaces hold significance in various domains beyond pure mathematics. Here are a few examples:

- Image Compression: In image processing, eigenvectors are used for techniques like Principal Component Analysis (PCA), which helps compress images by identifying the most significant variations in the data.

- Signal Processing: Eigenvectors play a role in signal filtering, where they assist in isolating specific frequencies within a signal.

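- Quantum Mechanics: In quantum mechanics, the eigenvectors of an observable’s operator represent the states in which that observable has a definite value, and the eigenvalues are the values a measurement can return. For the Hamiltonian (energy) operator, the eigenvalues are the allowed energies of the system.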
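To make the PCA item concrete, here is a minimal sketch of dimensionality reduction through the eigenvectors of a covariance matrix. The synthetic data, the random seed, and the choice to keep a single component are assumptions for illustration; real image compression pipelines involve more steps than this.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic 2-D data stretched along one axis (an assumption for this sketch)
X = rng.normal(size=(200, 2)) @ np.array([[3.0, 0.0],
                                           [0.0, 0.5]])

# Center the data and form the sample covariance matrix.
X_centered = X - X.mean(axis=0)
cov = np.cov(X_centered, rowvar=False)

# The covariance matrix is symmetric, so eigh applies; sort by descending variance.
variances, components = np.linalg.eigh(cov)
order = np.argsort(variances)[::-1]
variances, components = variances[order], components[:, order]

# Keep only the leading principal component: a 2-D to 1-D compression.
k = 1
X_reduced = X_centered @ components[:, :k]
print(X_reduced.shape)
print(variances[:k].sum() / variances.sum())   # fraction of variance retained
```

Each eigenvector of the covariance matrix is a principal direction, and its eigenvalue is the variance of the data along that direction, which is why the largest eigenvalues mark the components worth keeping.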

By mastering the art of finding eigenvectors from the eigenspace, you unlock a powerful toolset for various scientific and engineering applications. So, the next time you encounter a matrix, remember the hidden chamber of the eigenspace – a treasure trove of special vectors waiting to be discovered!

Conclusion: Unlocking the Secrets of the Eigenspace

By mastering the techniques outlined above, you’ll be well-equipped to extract eigenvectors from the eigenspaces of any square matrix. Remember, the eigenvalue equation and the null space method are two views of the same calculation, (A – λI)v = 0, and together they offer powerful tools for navigating this mathematical landscape. As you delve deeper into linear algebra, understanding eigenvectors will prove invaluable in various applications, from image compression to quantum mechanics.