Ever wondered how data scientists predict house prices or stock market trends? Ridge and Lasso regression are two powerful tools they use. These techniques help uncover hidden patterns in data, even when there are many variables at play. Let’s explore how these methods work and how you can use them in Python.

Understanding Regularization

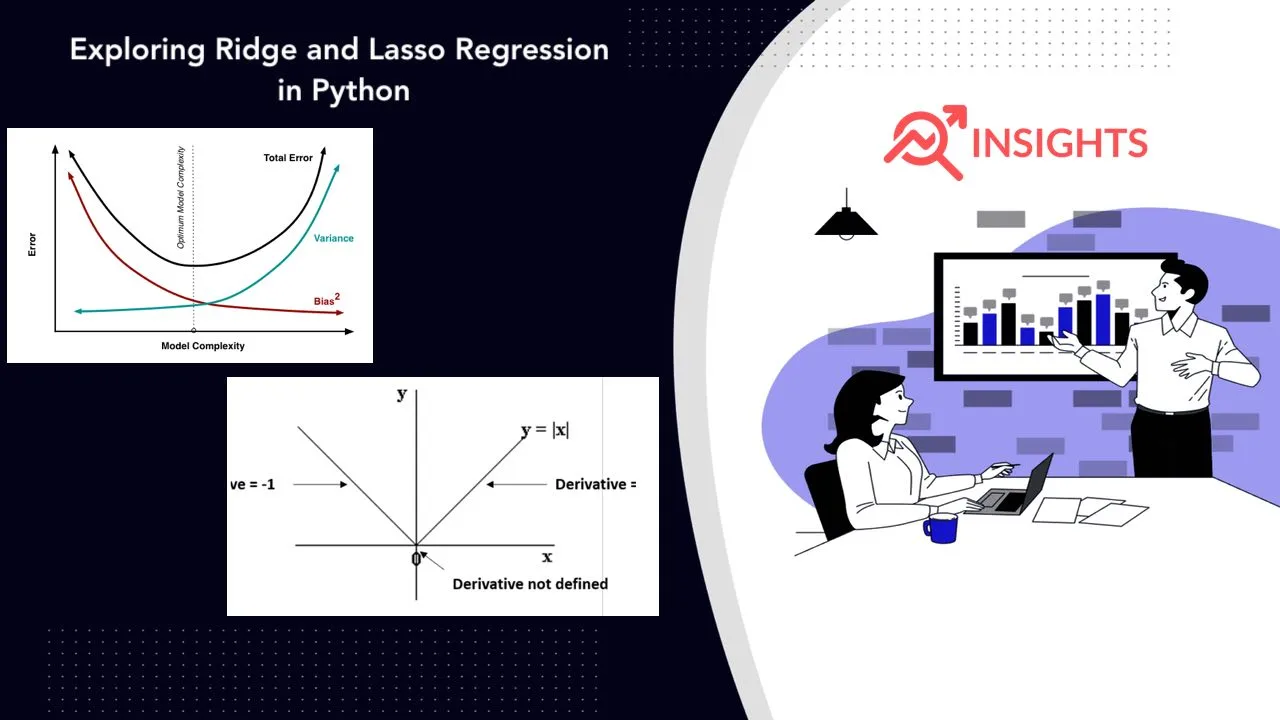

Before we dive into Ridge and Lasso, let’s talk about regularization. It’s a technique to prevent overfitting in machine learning models.

Overfitting happens when a model learns the training data too well. It captures noise along with the actual patterns. This leads to poor performance on new, unseen data.

Regularization adds a penalty term to the model’s loss function. This discourages the model from relying too heavily on any single feature.

Ridge Regression: L2 Regularization

Ridge regression, also known as L2 regularization, adds a squared magnitude of coefficient as penalty term to the loss function.

The formula for Ridge regression is:

Loss = OLS + α * (sum of squared coefficients)

Here, OLS is Ordinary Least Squares, and α is the regularization strength.

Ridge regression shrinks the coefficients of less important features towards zero. However, it never makes them exactly zero.

Lasso Regression: L1 Regularization

Lasso stands for Least Absolute Shrinkage and Selection Operator. It uses L1 regularization.

The formula for Lasso regression is:

Loss = OLS + α * (sum of absolute value of coefficients)

Lasso can shrink some coefficients to exactly zero. This makes it useful for feature selection.

Setting Up Your Python Environment

To get started, you’ll need to install some Python libraries. Open your terminal and run:

pip install numpy pandas scikit-learn matplotlib seaborn

These libraries will help us load data, build models, and visualize results.

Importing Necessary Libraries

Let’s start by importing the libraries we’ll need:

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.linear_model import Ridge, Lasso

from sklearn.metrics import mean_squared_error

import matplotlib.pyplot as plt

import seaborn as sns

Loading and Preparing the Data

For this example, we’ll use the Boston Housing dataset. It’s included in scikit-learn:

from sklearn.datasets import load_boston

boston = load_boston()

X = pd.DataFrame(boston.data, columns=boston.feature_names)

y = pd.Series(boston.target, name='PRICE')

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

We’ve split our data into training and test sets. This helps us evaluate our models fairly.

Implementing Ridge Regression

Now, let’s implement Ridge regression:

ridge = Ridge(alpha=1.0)

ridge.fit(X_train, y_train)

y_pred_ridge = ridge.predict(X_test)

mse_ridge = mean_squared_error(y_test, y_pred_ridge)

print(f"Ridge MSE: {mse_ridge}")

The alpha parameter controls the strength of regularization. A higher alpha means stronger regularization.

Implementing Lasso Regression

Similarly, we can implement Lasso regression:

lasso = Lasso(alpha=1.0)

lasso.fit(X_train, y_train)

y_pred_lasso = lasso.predict(X_test)

mse_lasso = mean_squared_error(y_test, y_pred_lasso)

print(f"Lasso MSE: {mse_lasso}")

Again, alpha controls the strength of regularization.

Comparing Ridge and Lasso Coefficients

Let’s compare how Ridge and Lasso affect the coefficients:

coef_comparison = pd.DataFrame({

'Feature': X.columns,

'Ridge': ridge.coef_,

'Lasso': lasso.coef_

})

plt.figure(figsize=(12, 6))

sns.barplot(x='Feature', y='Ridge', data=coef_comparison, color='blue', alpha=0.5, label='Ridge')

sns.barplot(x='Feature', y='Lasso', data=coef_comparison, color='red', alpha=0.5, label='Lasso')

plt.xticks(rotation=90)

plt.legend()

plt.title('Comparison of Ridge and Lasso Coefficients')

plt.tight_layout()

plt.show()

This plot shows how each method affects the feature coefficients differently.

Tuning the Alpha Parameter

The alpha parameter is crucial for both Ridge and Lasso. Let’s see how different alpha values affect the models:

alphas = [0.1, 1, 10, 100]

ridge_scores = []

lasso_scores = []

for alpha in alphas:

ridge = Ridge(alpha=alpha)

ridge.fit(X_train, y_train)

ridge_scores.append(mean_squared_error(y_test, ridge.predict(X_test)))

lasso = Lasso(alpha=alpha)

lasso.fit(X_train, y_train)

lasso_scores.append(mean_squared_error(y_test, lasso.predict(X_test)))

plt.plot(alphas, ridge_scores, label='Ridge')

plt.plot(alphas, lasso_scores, label='Lasso')

plt.xscale('log')

plt.xlabel('Alpha')

plt.ylabel('Mean Squared Error')

plt.legend()

plt.title('MSE vs Alpha for Ridge and Lasso')

plt.show()

This plot helps us choose the best alpha for each method.

Feature Selection with Lasso

Lasso can perform feature selection by setting some coefficients to zero. Let’s see which features it selects:

lasso = Lasso(alpha=1.0)

lasso.fit(X_train, y_train)

selected_features = X.columns[lasso.coef_ != 0]

print("Selected features:", selected_features)

These are the features Lasso considers most important for predicting house prices.

Cross-Validation for Model Selection

We can use cross-validation to choose between Ridge and Lasso:

from sklearn.model_selection import cross_val_score

ridge_cv = cross_val_score(Ridge(alpha=1.0), X, y, cv=5)

lasso_cv = cross_val_score(Lasso(alpha=1.0), X, y, cv=5)

print(f"Ridge CV Score: {ridge_cv.mean()}")

print(f"Lasso CV Score: {lasso_cv.mean()}")

The method with the higher cross-validation score is generally preferred.

Handling Multicollinearity

Both Ridge and Lasso can help with multicollinearity. This is when features are highly correlated with each other.

Let’s check for multicollinearity in our dataset:

correlation_matrix = X.corr()

plt.figure(figsize=(12, 10))

sns.heatmap(correlation_matrix, annot=True, cmap='coolwarm')

plt.title('Correlation Matrix of Features')

plt.show()

Ridge and Lasso can help reduce the impact of these correlations on our model.

Interpreting the Results

When interpreting Ridge and Lasso results, remember:

- Ridge shrinks all coefficients but doesn’t eliminate any.

- Lasso can eliminate less important features entirely.

- The magnitude of coefficients shows feature importance.

- A lower MSE indicates better model performance.

When to Use Ridge vs Lasso

Choose Ridge when:

- You want to keep all features.

- You suspect many features are important.

Choose Lasso when:

- You want to perform feature selection.

- You believe only a few features are important.

Elastic Net: Combining Ridge and Lasso

Elastic Net combines Ridge and Lasso regularization. It’s useful when you want a balance between the two:

from sklearn.linear_model import ElasticNet

elastic = ElasticNet(alpha=1.0, l1_ratio=0.5)

elastic.fit(X_train, y_train)

y_pred_elastic = elastic.predict(X_test)

mse_elastic = mean_squared_error(y_test, y_pred_elastic)

print(f"Elastic Net MSE: {mse_elastic}")

The l1_ratio parameter controls the mix of L1 and L2 regularization.

Scaling Features for Better Performance

Scaling features can improve the performance of Ridge and Lasso:

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X)

X_train_scaled, X_test_scaled, y_train, y_test = train_test_split(X_scaled, y, test_size=0.2, random_state=42)

ridge_scaled = Ridge(alpha=1.0)

ridge_scaled.fit(X_train_scaled, y_train)

lasso_scaled = Lasso(alpha=1.0)

lasso_scaled.fit(X_train_scaled, y_train)

print(f"Ridge MSE (scaled): {mean_squared_error(y_test, ridge_scaled.predict(X_test_scaled))}")

print(f"Lasso MSE (scaled): {mean_squared_error(y_test, lasso_scaled.predict(X_test_scaled))}")

in above code Scaling ensures all features contribute equally to the regularization penalty.

Conclusion

Ridge and Lasso regression are powerful tools for handling complex datasets. They help prevent overfitting and can improve model performance. Ridge is great when you want to keep all features, while Lasso excels at feature selection.

Remember, the choice between Ridge and Lasso often depends on your specific dataset and problem. Experiment with both methods and use techniques like cross-validation to find the best approach for your data.

As you continue your journey in data science, you might want to explore more Advanced Regression Techniques in Python. For time-dependent data, Time Series Regression in Python offers specialized methods. And for a broader overview, check out our guide on Regression in Python.