In machine learning, where algorithms grapple with making accurate predictions, terminology plays a crucial role. Understanding the terms “true positive” and “true negative” is fundamental to evaluating the performance of classification models. These concepts lie at the heart of the confusion matrix, a vital tool for assessing a model’s ability to correctly classify data points.

Setting the Stage: Classification in Machine Learning

Imagine a machine learning model tasked with differentiating between cat and dog images. The model analyzes features like fur patterns, facial structure, and posture to classify each image. Here’s where true positives and true negatives come into play:

- Positive Class: This represents the category the model is trying to identify (e.g., cats in our example).

- Negative Class: This encompasses all categories other than the positive class (e.g., dogs in our example).

True Positives and True Negatives

True Positive (TP): This scenario represents a resounding success for the model. It correctly identifies an instance belonging to the positive class. In our example, a true positive would be the model accurately classifying an image containing a cat as a “cat.”

True Negative (TN): Here, the model triumphs by correctly classifying an instance that does not belong to the positive class as negative. Returning to our cat and dog example, a true negative would be the model accurately classifying an image containing a dog as a “dog” (not a cat).
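The two definitions above come down to comparing the actual label with the predicted label relative to a chosen positive class. The minimal sketch below makes that comparison explicit. It assumes string labels and treats "cat" as the positive class. The function name `classify_outcome` is hypothetical. The same comparison also produces the two error cases, false positives and false negatives, which are defined in the next section.

```python
def classify_outcome(actual: str, predicted: str, positive: str = "cat") -> str:
    """Label a single prediction as TP, TN, FP, or FN.

    Hypothetical helper: labels are plain strings and `positive`
    names the class the model is trying to identify.
    """
    actual_pos = actual == positive
    predicted_pos = predicted == positive
    if actual_pos and predicted_pos:
        return "TP"  # a cat correctly classified as a cat
    if not actual_pos and not predicted_pos:
        return "TN"  # a dog correctly classified as not-a-cat
    if predicted_pos:
        return "FP"  # a dog mistakenly classified as a cat
    return "FN"      # a cat mistakenly classified as a dog


print(classify_outcome("cat", "cat"))  # TP
print(classify_outcome("dog", "dog"))  # TN
```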

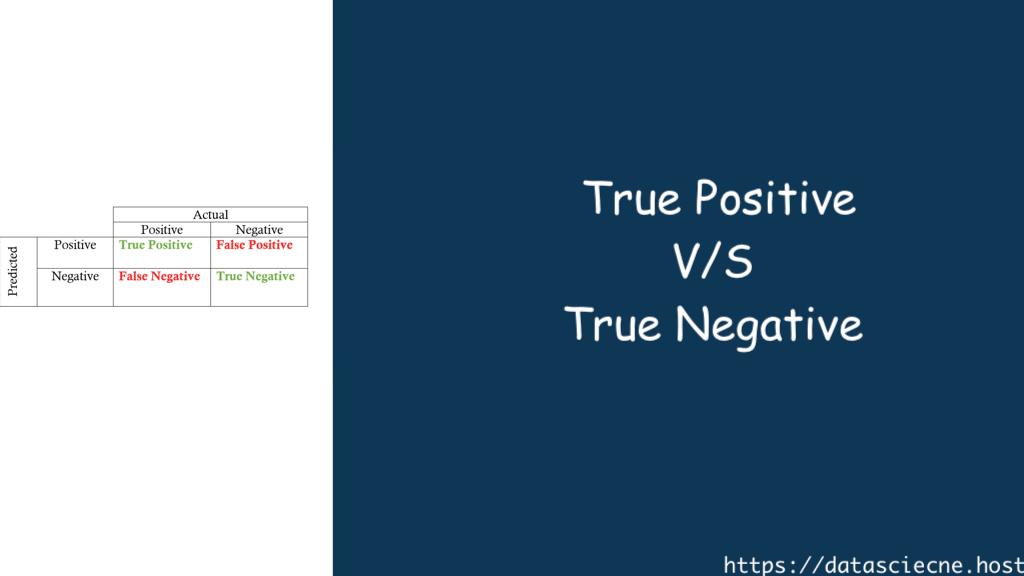

Visualizing Success: The Confusion Matrix

The confusion matrix is a powerful tool that summarizes the performance of a classification model. It’s a square table in which the rows represent the actual classes and the columns represent the predicted classes. For a two-class problem it has four cells:

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | True Positive (TP) | False Negative (FN) |
| Actual Negative | False Positive (FP) | True Negative (TN) |

- False Positive (FP): This outcome signifies an error by the model. It incorrectly classifies an instance that doesn’t belong to the positive class as positive. In our example, a false positive would be the model mistakenly classifying a dog image as a “cat.”

- False Negative (FN): This scenario represents another form of error. The model misses the mark by classifying an instance that truly belongs to the positive class as negative. Continuing with the cat and dog example, a false negative would be the model incorrectly classifying a cat image as a “dog.”
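To fill in the four cells, you tally every prediction into one of them. The sketch below does this for a small batch of made-up cat/dog labels. It then arranges the counts in the layout of the table above, with actual classes as rows and predicted classes as columns. The function name and the sample data are illustrative only.

```python
from collections import Counter


def confusion_counts(actual, predicted, positive="cat"):
    """Tally TP, FN, FP, TN for a binary problem (hypothetical helper)."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    counts = Counter(TP=0, FN=0, FP=0, TN=0)
    for a, p in zip(actual, predicted):
        if a == positive:
            # Actual cat: either caught (TP) or missed (FN).
            counts["TP" if p == positive else "FN"] += 1
        else:
            # Actual dog: either wrongly flagged (FP) or correctly rejected (TN).
            counts["FP" if p == positive else "TN"] += 1
    return counts


# Made-up labels for six images, for illustration only.
actual    = ["cat", "cat", "cat", "dog", "dog", "dog"]
predicted = ["cat", "cat", "dog", "dog", "cat", "dog"]

c = confusion_counts(actual, predicted)
# Rows = actual class, columns = predicted class.
matrix = [[c["TP"], c["FN"]],
          [c["FP"], c["TN"]]]
print(matrix)  # [[2, 1], [1, 2]]
```

In practice, scikit-learn’s `sklearn.metrics.confusion_matrix(y_true, y_pred, labels=...)` computes the same table. Be careful with ordering: it sorts labels by default, so with 0/1 labels the negative class comes first and the result is laid out as `[[TN, FP], [FN, TP]]`. Passing `labels=["cat", "dog"]` gives the positive-first layout used here.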

Understanding these concepts is essential because they form the foundation of various performance metrics used to evaluate the efficacy of classification models. The table below summarizes all four outcomes, this time framed as a medical test in which the positive class is “disease present”:

| Scenario | Description |
|---|---|
| True Positive (TP) | Test result is positive, and the disease is present. |
| True Negative (TN) | Test result is negative, and the disease is absent. |
| False Positive (FP) | Test result is positive, but the disease is absent (Type I Error). |
| False Negative (FN) | Test result is negative, but the disease is present (Type II Error). |

Why These Terms Matter: Evaluating Model Performance

By analyzing the counts within the confusion matrix, particularly the true positives and true negatives, we can gauge the effectiveness of a classification model. Here’s how:

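- High True Positives and True Negatives: When most actual positives end up as true positives and most actual negatives end up as true negatives (that is, there are few false negatives and false positives), the model is accurately identifying both positive and negative instances.

- Low True Positives or True Negatives: This suggests potential issues with the model. Few true positives relative to the number of actual positives indicates that the model struggles to identify the positive class. Few true negatives relative to the number of actual negatives indicates difficulty in correctly classifying instances outside the positive class. Because raw counts depend on how many instances of each class exist, judge each count against its class total (TP against TP + FN, TN against TN + FP) rather than comparing the counts directly.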
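Raw counts can mislead when the classes are imbalanced. Dividing each count by the size of its actual class gives the true positive rate (sensitivity) and the true negative rate (specificity), which can be compared fairly. The sketch below uses made-up counts for a dataset with 10 cats and 990 dogs. The helper names are hypothetical. Returning NaN for an empty class is an assumption of this sketch.

```python
def safe_div(num: int, den: int) -> float:
    """Divide, returning NaN when the denominator is zero (assumed convention)."""
    return num / den if den else float("nan")


def class_rates(tp: int, fn: int, fp: int, tn: int) -> tuple[float, float]:
    """Normalize TP and TN by the size of their actual class."""
    tpr = safe_div(tp, tp + fn)  # true positive rate (sensitivity / recall)
    tnr = safe_div(tn, tn + fp)  # true negative rate (specificity)
    return tpr, tnr


# Made-up counts: 10 actual cats, 990 actual dogs.
tpr, tnr = class_rates(tp=8, fn=2, fp=10, tn=980)
print(f"TPR={tpr:.2f}, TNR={tnr:.2f}")  # TPR=0.80, TNR=0.99
```

Here 8 true positives look tiny next to 980 true negatives, yet the model finds 80% of the cats. The gap in raw counts reflects class sizes, not model quality.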

Importance of True Positives and True Negatives in Performance Metrics

By analyzing the number of true positives, true negatives, false positives, and false negatives, data scientists can calculate various performance metrics to assess the effectiveness of a classification model. Here are some key metrics that leverage these concepts:

- Accuracy: The proportion of correctly classified cases (true positives plus true negatives) out of all test cases: (TP + TN) / (TP + TN + FP + FN).

- Precision: The proportion of true positives among all positive predictions (true positives plus false positives): TP / (TP + FP). A high precision indicates that when the model flags a case as positive, it is usually right, so few healthy individuals are flagged as diseased.

- Recall: Also known as sensitivity, recall is the proportion of true positives out of all actual positive cases (true positives plus false negatives): TP / (TP + FN). A high recall indicates that the model captures most of the actual disease cases.

- F1-Score: The harmonic mean of precision and recall, 2 × (precision × recall) / (precision + recall). It condenses both metrics into a single balanced score.
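All four metrics follow directly from the confusion-matrix counts. The sketch below computes accuracy = (TP + TN) / total, precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 as the harmonic mean of precision and recall. It uses made-up counts for a hypothetical disease test. The function name is illustrative. Returning 0.0 when a denominator is zero is one common convention, assumed here, and libraries may handle that case differently.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict[str, float]:
    """Compute accuracy, precision, recall, and F1 from raw counts.

    Undefined ratios (zero denominators) are reported as 0.0 by assumption.
    """
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # Harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Made-up counts: 50 patients with the disease, 950 without.
metrics = classification_metrics(tp=40, tn=930, fp=20, fn=10)
rounded = {name: round(value, 3) for name, value in metrics.items()}
print(rounded)  # {'accuracy': 0.97, 'precision': 0.667, 'recall': 0.8, 'f1': 0.727}
```

Accuracy reaches 0.97 here even though a third of the positive test results are wrong. This is why precision and recall are usually reported alongside accuracy when one class is rare.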

Applications of True Positives and True Negatives

These concepts extend beyond image classification and permeate various machine learning domains:

- Spam Filtering: A spam filter aims to identify spam emails (positive class) and categorize legitimate emails (negative class). True positives would be emails correctly classified as spam, while true negatives would be legitimate emails accurately identified as non-spam.

- Medical Diagnosis: Machine learning models can assist in medical diagnosis tasks. Here, a positive class might represent a specific disease, and true positives would be cases where the model correctly identifies the disease, while true negatives would be instances where the model accurately identifies the absence of the disease.

Example: Spam Filter Performance

Consider a spam filter that aims to categorize emails as spam or not spam. Here’s how true positives and true negatives translate in this context:

- True Positive: The filter correctly identifies a spam email and diverts it to the spam folder.

- True Negative: The filter correctly identifies a legitimate email and delivers it to the inbox.

However, the filter can also make mistakes:

- False Positive: The filter incorrectly identifies a legitimate email as spam, potentially leading to important messages being missed.

- False Negative: The filter incorrectly categorizes a spam email as legitimate, allowing it to infiltrate the inbox and potentially expose the user to phishing attempts or malware.

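Understanding the interplay between true positives, true negatives, false positives, and false negatives lets you tune your spam filter deliberately. Making the filter stricter about what it flags as spam, for instance, reduces false positives at the cost of more false negatives, and loosening it does the opposite.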
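One common way to tune such a filter is to move its decision threshold. The sketch below assumes the filter outputs a spam score between 0 and 1 and flags an email when the score meets the threshold. The scores, labels, and function name are all made up for illustration. It recounts the four outcomes at two thresholds.

```python
def confusion_at_threshold(scores, is_spam, threshold):
    """Count (TP, FP, FN, TN) when emails scoring >= threshold are flagged as spam.

    Hypothetical helper: `scores` are assumed model outputs in [0, 1].
    """
    tp = fp = fn = tn = 0
    for score, spam in zip(scores, is_spam):
        flagged = score >= threshold
        if flagged and spam:
            tp += 1  # spam correctly sent to the spam folder
        elif flagged:
            fp += 1  # legitimate email wrongly flagged
        elif spam:
            fn += 1  # spam that slipped into the inbox
        else:
            tn += 1  # legitimate email correctly delivered
    return tp, fp, fn, tn


# Made-up scores and true labels for eight emails.
scores  = [0.95, 0.80, 0.65, 0.40, 0.70, 0.30, 0.20, 0.05]
is_spam = [True, True, True, True, False, False, False, False]

for t in (0.5, 0.75):
    tp, fp, fn, tn = confusion_at_threshold(scores, is_spam, t)
    print(f"threshold={t}: TP={tp} FP={fp} FN={fn} TN={tn}")
# threshold=0.5: TP=3 FP=1 FN=1 TN=3
# threshold=0.75: TP=2 FP=0 FN=2 TN=4
```

Raising the threshold from 0.5 to 0.75 eliminates the false positive (no legitimate email is lost) but lets one more spam message through. Which trade-off is acceptable depends on how costly each kind of error is for your users.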

The Final Word: Unlocking Insights

Understanding true positives and true negatives empowers you to effectively evaluate the performance of classification models. By analyzing these counts alongside the metrics derived from them, such as precision and recall, you gain valuable insights into your model’s strengths and weaknesses. This knowledge paves the way for model refinement and, ultimately, more accurate and reliable machine learning applications.